Introduction

Data has become a strategic asset for modern organizations, yet delivering reliable, trustworthy data at speed remains one of the hardest challenges facing engineering teams. Data teams across industries run into the same problems: pipelines that fail silently, data quality issues that erode stakeholder trust, manual processes that bottleneck delivery, and compliance gaps that create regulatory risk. DataOps addresses these problems by applying Agile, DevOps, and lean manufacturing principles to the data lifecycle. It treats data pipelines as production software that demands the same rigor: version control, automated testing, continuous integration and deployment, systematic monitoring, and built-in governance. The DataOps Certified Professional (DOCP) program from DevOpsSchool is designed to turn this mindset into practical capability. It aims to validate hands-on skills in building automated, observable, quality-gated, and governed data pipelines using widely used tools such as Airflow, Kafka, Great Expectations, Terraform, and Kubernetes.

What is DataOps

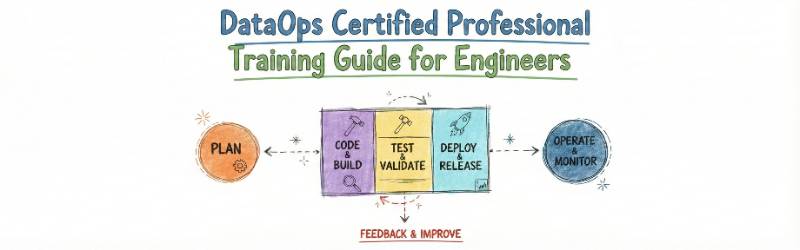

DataOps is a collaborative data management practice that improves communication, integration, and automation between the people who produce and manage data and the people who consume it. The goal is faster value delivery through predictable delivery and change management for data, data models, and related artifacts, with governance built in. A helpful way to think about it: DataOps applies Agile, DevOps, and lean ideas to analytics and data engineering so you ship “data products” with the same discipline used in software delivery. The DevOpsSchool DOCP positioning also highlights automation across ingestion, transformation, and delivery, along with monitoring/observability, quality, and governance for production pipelines.

DOCP at a glance

DOCP is a DataOps-focused certification program by DevOpsSchool that aims to validate hands-on capability in DataOps principles, tools, and automated data pipeline management. The program description emphasizes building resilient pipelines, automating phases of pipeline work, using observability dashboards, and applying governance and compliance practices.

Training is offered in several formats (self-learning video, live online batches, one-to-one, and corporate options). The course page mentions durations of about 60 hours, with corporate options of about 2–3 days. DevOpsSchool notes that hands-on demos are executed on its AWS cloud and that learners get step-by-step lab setup guidance, with the option to practice via AWS Free Tier or VMs.

Certifications table

| Track | Certification (Level) | Who it’s for | Prerequisites | Skills covered | Recommended order |
|---|---|---|---|---|---|
| DataOps | DataOps Certified Professional (DOCP) (Professional) | Data engineers, DevOps/cloud engineers working on data platforms; managers/architects overseeing data delivery | Basic knowledge of data engineering or DevOps is recommended | DataOps principles, automated pipelines, CI/CD for data, observability/monitoring, quality/testing, governance | 1 |
| DevOps | DevOps track (Suggested) | Engineers building CI/CD and platform automation; managers modernizing delivery | Dev fundamentals; basic Linux/Git helpful | CI/CD, automation, containers, IaC, monitoring | After DOCP if you want broader platform delivery |
| DevSecOps | DevSecOps track (Suggested) | Security engineers and platform teams shifting security left | Security basics; SDLC familiarity | Secure CI/CD, governance, compliance automation | After DOCP if governance is your focus |
| SRE | SRE track (Suggested) | SRE/ops teams running data platforms in production | Monitoring basics; Linux fundamentals | SLOs/SLIs, incident response, reliability engineering | After DOCP if reliability is your gap |
| AIOps/MLOps | AIOps/MLOps track (Suggested) | ML engineers and platform teams operationalizing models | Python + data fundamentals | Model/data pipeline automation, reproducibility, monitoring | After DOCP if ML/AI is the destination |
| FinOps | FinOps track (Suggested) | Cloud cost owners, managers, architects | Cloud billing awareness; cloud fundamentals | Cloud cost governance, optimization, chargeback/showback | After DOCP if cost + governance matters |

What it is

DataOps Certified Professional (DOCP) is positioned as a global/international certification from DevOpsSchool that validates DataOps principles, tool usage, and automated data pipeline management. It emphasizes real-world, tool-centric work across orchestration, integration, CI/CD for data, observability, data quality, and governance.

Who should take it

- Data Engineers who want production-grade orchestration, quality gates, and monitoring across pipelines.

- DevOps/Cloud Engineers who are increasingly responsible for data platforms and data pipeline reliability.

- Data Scientists/Analysts who need reproducible workflows, governed datasets, and reliable delivery.

- Engineering managers, architects, and leads who own outcomes like time-to-data, trust in dashboards, and compliance posture.

Skills you’ll gain

- DataOps foundations and agile/lean ways of working in data delivery.

- Pipeline design and automation, including common orchestration and integration tools (examples on the DOCP overview include Airflow, Kafka, StreamSets, Dagster, Databricks, and others).

- CI/CD-style automation for data workflows, plus monitoring/observability practices and dashboards.

- Data quality and testing practices (the overview explicitly calls out tools like Great Expectations and Soda).

- Infrastructure-as-code and cloud-native concepts for scalable delivery (Docker, Kubernetes, Terraform are mentioned in the overview).

- Security, governance, and compliance mindset for enterprise data assets (governance and compliance are emphasized in the overview).

Real-world projects you should be able to do after it

- Build an end-to-end data pipeline that ingests, transforms, and delivers data with automation and predictable change management.

- Implement an orchestration workflow (DAG-based pipeline) with monitoring and alerting, then operate it like a production service.

- Add data quality checks as gates so broken data does not reach downstream dashboards or ML features.

- Design a governed data delivery flow (access control + lineage/metadata practices) suitable for enterprise stakeholders.

- Run practical demos/labs using common tools referenced on the course page (e.g., Ubuntu/Vagrant and demonstration tools like Apache NiFi, StreamSets Data Collector, and Kafka Connect are listed).
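The quality-gate project in the list above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not DOCP course material: the `Check` class, the `run_pipeline` function, and the `order_id`/`amount` fields are hypothetical names, and a real pipeline would more likely express these checks in a dedicated tool such as Great Expectations or Soda.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Rows = List[Dict[str, Any]]  # hypothetical in-memory batch format


class QualityGateError(Exception):
    """Raised when a batch fails a check and must not be delivered."""


@dataclass(frozen=True)
class Check:
    name: str
    predicate: Callable[[Rows], bool]


# Hypothetical checks for an illustrative "orders" batch.
CHECKS = [
    Check("batch_not_empty", lambda rows: len(rows) > 0),
    Check("order_id_present",
          lambda rows: all(r.get("order_id") is not None for r in rows)),
    Check("amount_non_negative",
          lambda rows: all(r["amount"] >= 0 for r in rows)),
]


def transform(raw_rows: Rows) -> Rows:
    """Normalize amounts to floats rounded to two decimals."""
    return [{**r, "amount": round(float(r["amount"]), 2)} for r in raw_rows]


def run_gate(rows: Rows, checks: List[Check]) -> None:
    """Evaluate every check and fail the batch if any check fails."""
    failed = [c.name for c in checks if not c.predicate(rows)]
    if failed:
        raise QualityGateError("quality gate failed: " + ", ".join(failed))


def run_pipeline(raw_rows: Rows, checks: List[Check],
                 deliver: Callable[[Rows], None]) -> int:
    """Transform, gate, then deliver; returns the number of rows delivered."""
    rows = transform(raw_rows)
    run_gate(rows, checks)  # raises before deliver() is ever called
    deliver(rows)
    return len(rows)
```

The design choice worth noting is that the gate runs before delivery and fails the whole batch, so a downstream dashboard or feature table either receives a batch that passed every check or receives nothing.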

Preparation plan (7–14 days / 30 days / 60 days)

7–14 days (fast-track, for experienced practitioners)

- Days 1–2: Refresh core DataOps concepts and why DataOps borrows from Agile/DevOps/lean; focus on cycle time, quality, and predictable change.

- Days 3–6: Build one pipeline end-to-end (ingest → transform → deliver), then automate repeatability and runbook steps.

- Days 7–10: Add quality checks, monitoring/observability dashboards, and basic governance controls.

- Days 11–14: Practice “production drills”: failure injection, schema drift handling, backfills, alert tuning, and stakeholder sign-off.
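One of the production drills above, schema drift handling, starts with detecting the drift. The sketch below compares an incoming record against an expected schema. It is an assumption-laden illustration: `EXPECTED_SCHEMA`, `detect_schema_drift`, `has_drift`, and the column names are hypothetical, and production systems usually delegate this to a schema registry or a data quality tool.

```python
from typing import Any, Dict, List

# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def detect_schema_drift(expected: Dict[str, type],
                        row: Dict[str, Any]) -> Dict[str, List[str]]:
    """Report columns that are missing, unexpected, or of a changed type."""
    expected_cols, actual_cols = set(expected), set(row)
    type_changed = sorted(
        col for col in expected_cols & actual_cols
        # None is treated as "no value" rather than as a type change.
        if row[col] is not None and not isinstance(row[col], expected[col])
    )
    return {
        "missing": sorted(expected_cols - actual_cols),
        "unexpected": sorted(actual_cols - expected_cols),
        "type_changed": type_changed,
    }


def has_drift(report: Dict[str, List[str]]) -> bool:
    """True if any category in the report is non-empty."""
    return any(report.values())
```

During a drill, you might feed the function a record with a renamed or retyped column and confirm that the pipeline alerts or quarantines the batch instead of loading it.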

30 days (balanced, best for working engineers)

- Week 1: Concepts + baseline lab setup (the course page notes lab guidance and that demos run on DevOpsSchool’s AWS cloud).

- Week 2: Orchestration + integration patterns; build at least two pipelines (batch + streaming style if relevant).

- Week 3: CI/CD for data + test automation + quality gates, then document operating procedures.

- Week 4: Observability + governance; finish a capstone pipeline that your team could realistically run.

60 days (deep, best for managers or cross-skill transitions)

- Month 1: Learn the full lifecycle and deliver a working pipeline with repeatable releases and measurement.

- Month 2: Add enterprise elements (SLA/SLO thinking for data freshness, access controls, auditability, and cost awareness), then coach others with templates and standards.
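The "SLA/SLO thinking for data freshness" item above can be made concrete with a small calculation. The sketch below assumes a hypothetical setup in which a monitor periodically records when it checked a dataset and when that dataset was last loaded. The function names, the one-hour freshness limit, and the target ratio are illustrative assumptions, not values from the course.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# Each observation is (checked_at, last_loaded_at) for one monitoring probe.
Observation = Tuple[datetime, datetime]


def freshness_sli(observations: List[Observation], max_age: timedelta) -> float:
    """Fraction of probes where the data was no older than max_age."""
    if not observations:
        raise ValueError("at least one observation is required")
    fresh = sum(
        1 for checked_at, last_loaded_at in observations
        if checked_at - last_loaded_at <= max_age
    )
    return fresh / len(observations)


def meets_slo(observations: List[Observation], max_age: timedelta,
              target: float) -> bool:
    """Compare the measured freshness SLI against the SLO target ratio."""
    return freshness_sli(observations, max_age) >= target
```

For example, with a one-hour limit and four probes of which three found data younger than an hour, the SLI is 0.75, which would miss a 0.99 target and signal that freshness needs attention before stakeholders notice stale dashboards.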

Common mistakes (and how to avoid them)

- Treating DataOps as “just ETL tooling,” instead of a delivery system with quality, observability, and governance.

- Skipping monitoring/alerting until late, which increases time-to-detect and time-to-recover in pipelines.

- Not automating tests and data quality checks, which leads to silent failures and stakeholder trust loss.

- Overbuilding architecture before proving value; DataOps improves cycle time by reducing waste and work in progress (WIP), so iterate in smaller batches.

- Ignoring lab readiness; the course lists baseline system requirements (Windows/Mac/Linux, at least 2GB RAM and 20GB storage).

Best next certification after this

- Same track: Go deeper into advanced DataOps patterns (streaming reliability, metadata/lineage at scale, governance automation) and pursue a higher specialization within DataOps delivery in your organization.

- Cross-track: Pair DataOps with SRE for reliability (SLOs, incident response) if your pain is outages and data freshness failures.

- Leadership: Add FinOps-style governance if cost, accountability, and unit economics are central to your platform roadmap.

Choose your path (6 learning paths)

DevOps path

Pick this if your biggest goal is consistent release automation across platforms and teams, and you want DataOps to fit into a broader CI/CD culture. DOCP still helps here because it treats data pipelines as production software that needs predictable delivery.

DevSecOps path

Pick this if regulated data, audit needs, and policy-driven controls are constant concerns. DOCP’s emphasis on governance and compliance aligns with how DevSecOps teams think about guardrails.

SRE path

Pick this if you are on call for data platforms and your problem is reliability: broken pipelines, missed SLAs, and noisy alerts. DOCP highlights observability and monitoring practices that support this reliability mindset.

AIOps/MLOps path

Pick this if the destination is AI/ML in production, where reproducibility and reliable feature pipelines decide whether models succeed. DOCP’s automation, testing, and monitoring themes are directly relevant to ML operations.

DataOps path

Pick this if you own data delivery end to end: ingestion, transformation, quality, and governed access to analytics consumers. This is the most direct fit for DOCP’s tool-centric, pipeline-focused approach.

FinOps path

Pick this if your organization wants cost transparency and control across cloud data platforms. While DOCP is not a cost certification, the operational discipline it promotes makes cost governance easier to implement.

Role → recommended certifications mapping

This mapping keeps DOCP as the DataOps anchor and then suggests how to layer adjacent tracks depending on your role.

Next certifications to take (3 options)

- Same track (DataOps depth): Advance into deeper pipeline reliability, schema management, and governance automation patterns after DOCP.

- Cross-track (SRE or DevSecOps): Add SRE if production reliability is the main pain, or DevSecOps if compliance and controls dominate.

- Leadership (FinOps): Add FinOps if your KPIs include cost visibility, chargeback/showback, and budget accountability for data platforms.

Training-cum-certification support institutions

DevOpsSchool

DOCP is presented as a global certification by DevOpsSchool focused on DataOps principles, tools, and automated data pipeline management. The program emphasizes real-world, tool-centric learning across orchestration, CI/CD for data, observability, data quality/testing, IaC, and governance. It also highlights structured assessment (practical exercises/quizzes/final exam) and a defined certification process (training → labs → exam → credential).

Cotocus

Cotocus publishes DOCP-focused content and learning guidance that explains the DOCP program structure and learning objectives at a practical level. A DOCP training document carrying Cotocus copyright also outlines hands-on projects and interview preparation support for DataOps roles. The same document highlights coverage of orchestration/automation (Airflow, Kubernetes), CI/CD in data environments, and IaC/configuration concepts for data systems.

Scmgalaxy

Scmgalaxy offers DataOps training and promotes certification-oriented learning for practitioners implementing DataOps culture in organizations. Their positioning emphasizes practical understanding of DataOps implementation rather than only theory. This can be useful if you want an additional practice route alongside DOCP’s official curriculum focus.

BestDevOps

BestDevOps offers a DataOps training course described as live, instructor-led online training and certification support. Their course page states it is led by expert trainers from DevOpsSchool and includes long-form self-paced videos plus projects/exercises as part of the learning package. It also claims certification is issued by DevOpsSchool for that program offering, along with interview and assessment support.

devsecopsschool

devsecopsschool publishes deep-dive learning content that connects DataOps work with toolchains, end-to-end pipeline build practices, and operational readiness. It’s helpful when your DOCP preparation needs stronger coverage on controls, governance, and “secure-by-default” delivery behaviors. It also fits well if you plan a cross-track move toward DevSecOps after DOCP.

sreschool

sreschool is positioned as the SRE-focused learning path in the same ecosystem, covering reliability, observability, and incident readiness. This is a strong complement to DOCP if your goal is running data pipelines like production services with reliability outcomes. It’s especially relevant for engineers who are on-call for data platforms.

aiopsschool

aiopsschool is presented as a path for AIOps/MLOps-style roles where automation and AI-driven operational practices matter. It complements DOCP when you’re connecting DataOps pipelines to ML/AI workflows and want stronger operational automation coverage. It’s a practical next step if your organization is moving toward AI-ready data operations.

dataopsschool

dataopsschool publishes DOCP-oriented explainers that summarize what DOCP validates (automation, monitoring/observability, data quality/testing, IaC, security/governance). This makes it useful as a supporting knowledge base and revision source alongside the official DOCP program page. It can also help you translate the certification scope into project-ready outcomes.

finopsschool

finopsschool is positioned as a FinOps learning path in the broader roadmap ecosystem (cost + governance). It becomes relevant after DOCP when your data platform work needs cost visibility, allocation, and optimization discipline. This is especially useful for managers and platform owners responsible for cloud spend and governance.

FAQs

1) Is DOCP hard for working engineers?

It is manageable if you already understand basic data engineering or DevOps concepts, which DevOpsSchool lists as a recommended prerequisite.

2) How long does preparation typically take?

The course page references options like ~60 hours in several learning modes and corporate formats of about 2–3 days, so your prep can range from a few weeks of evenings to a concentrated bootcamp style.

3) Do I need to be a Data Engineer to start?

No, but it helps to understand either data engineering basics or DevOps basics, as that is explicitly recommended.

4) What kind of “hands-on” should I expect?

DevOpsSchool states that demos/hands-on are executed on its AWS cloud and you receive a step-by-step lab guide, with the option to practice using AWS Free Tier or VMs.

5) What tools should I be comfortable with?

The DOCP overview lists tooling areas like orchestration, monitoring/observability, data quality/testing, and IaC, and it mentions tools such as Airflow, Kafka, Great Expectations, Docker, Kubernetes, and Terraform.

6) Is this useful for managers?

Yes. DataOps is explicitly about predictable delivery and change management of data artifacts, which aligns directly with managerial KPIs like cycle time, quality, and stakeholder trust.

7) What are typical outcomes after DOCP?

The DOCP overview frames outcomes around being able to build, automate, monitor, and scale data pipelines and lead DataOps transformations.

8) What system setup do I need?

The course page lists Windows/Mac/Linux with at least 2GB RAM and 20GB storage, and it references common Linux distributions (CentOS/Red Hat/Ubuntu/Fedora).

9) Can I learn without attending every live session?

DevOpsSchool says you can view presentations/notes/recordings in their LMS and even attend missed sessions in another batch within a time window, with lifetime access to learning materials.

10) Is classroom training available?

The course page states classroom training availability in cities like Bangalore, Hyderabad, Chennai, and Delhi (and possibly elsewhere with sufficient participants).

11) What’s the biggest reason people fail to benefit from DataOps training?

A common issue is staying stuck in manual steps; the course page highlights that DataOps is meant to reduce slow, error-prone development by using new tools and methodologies and by applying lean/Agile/DevOps ideas.

12) How should I sequence certifications?

Start with DOCP if your work touches data pipelines and analytics delivery, then add SRE for reliability, DevSecOps for governance/security controls, DevOps for broader automation, or FinOps for cost governance depending on your gaps.

FAQs on DOCP

1) What exactly does DOCP validate?

It is positioned to validate DataOps principles, tools, and automated data pipeline management, with emphasis on real-world practice.

2) What background is recommended before DOCP?

Basic knowledge of data engineering or DevOps concepts is recommended.

3) Does DOCP include observability and monitoring?

Yes, the overview explicitly includes monitoring/observability themes and mentions dashboards and tools in that category.

4) Does DOCP cover data quality and testing?

Yes, the overview explicitly includes data quality and testing and names tools like Great Expectations and Soda.

5) Does DOCP cover IaC and cloud-native ideas?

Yes, the overview includes infrastructure-as-code and mentions Docker, Kubernetes, and Terraform as examples.

6) Will I get a project to implement after training?

DevOpsSchool states that after training completion, participants get a real-time scenario-based project to apply learnings.

7) Is there interview preparation support?

DevOpsSchool states it provides interview preparation support and mentions an “Interview KIT” and guidance.

8) How are labs delivered?

DevOpsSchool says demos/hands-on are executed by trainers on DevOpsSchool’s AWS cloud and learners are guided to set up labs for practice.

Testimonials

“The training was very useful and interactive. Rajesh helped develop the confidence of all.” — Abhinav Gupta, Pune (5.0).

“Rajesh is very good trainer… we really liked the hands-on examples covered during this training program.” — Indrayani, India (5.0).

“Very well organized training… very helpful.” — Sumit Kulkarni, Software Engineer (5.0).

“Thanks… training was good, appreciate the knowledge…” — Vinayakumar, Project Manager, Bangalore (5.0).

Conclusion

If your organization depends on dashboards, ML features, operational analytics, or governed datasets, DataOps is no longer optional: it is the delivery discipline that makes data trustworthy and repeatable. DOCP is designed to build that discipline through practical pipeline automation, quality, observability, and governance skills that map to real production work. Use the official DOCP page for program specifics, then choose your next step based on your role: reliability (SRE), controls (DevSecOps), scale automation (DevOps), or cost governance (FinOps).