Introduction & Overview

What is Azure Percept?

Azure Percept is a retired Microsoft platform designed to simplify the development and deployment of edge AI solutions, particularly for Internet of Things (IoT) and robotics applications. It combined hardware and software to enable rapid prototyping and deployment of AI models for computer vision and speech processing at the edge. The platform integrated seamlessly with Azure cloud services, such as Azure IoT Hub, Azure Machine Learning, and Azure Cognitive Services, to support real-time inferencing and device management.

History or Background

Azure Percept was introduced by Microsoft in March 2021 as part of its Azure edge computing portfolio. The platform aimed to bridge the gap between complex AI development and practical edge deployment, targeting industries like manufacturing, retail, healthcare, and smart cities. It was designed to lower the barrier to entry for developers by offering low-code development options and pre-built AI models. However, Microsoft retired Azure Percept DK and its associated services in March 2023, shifting focus to new edge device platforms and developer experiences. Despite its retirement, understanding Azure Percept’s architecture and use cases provides valuable insights into edge AI and RobotOps workflows.

- March 2021: Azure Percept launched with the Azure Percept Development Kit (DK) and Azure Percept Studio, targeting edge AI prototyping.

- June 2022: Expanded documentation and sample models for applications like object detection and people counting were released.

- February 2023: Firmware update for Azure Percept DK Vision and Audio components announced.

- March 2023: Official retirement of Azure Percept DK and associated Azure services, with no further support for Azure Percept Studio, OS updates, or Custom Vision integration.

Why is it Relevant in RobotOps?

RobotOps (Robotics Operations) is the practice of applying DevOps principles to robotics development, deployment, and maintenance. Azure Percept was relevant in RobotOps due to its ability to streamline AI model development, deployment, and management for robotic systems at the edge. It supported:

- Rapid Prototyping: Enabled roboticists to quickly test AI-driven features like object detection or speech recognition.

- Integration with Cloud Tools: Facilitated CI/CD pipelines and cloud-based monitoring, aligning with RobotOps’ focus on automation and scalability.

- Edge AI: Reduced latency and bandwidth usage, critical for real-time robotic applications in dynamic environments.

Although retired, Azure Percept’s principles remain relevant for understanding how edge AI integrates into modern RobotOps workflows.

Core Concepts & Terminology

Key Terms and Definitions

- Edge AI: Running AI models on edge devices to process data locally, reducing latency and cloud dependency.

- Azure Percept DK: A hardware development kit built around the Azure Percept Vision module, with the Azure Percept Audio module available as an accessory, for prototyping edge AI solutions.

- Azure Percept Studio: A web-based portal for managing AI models, deploying solutions, and integrating with Azure services.

- Azure IoT Hub: A cloud service for managing IoT devices and enabling secure communication between edge and cloud.

- Azure Custom Vision: A service for training and deploying custom computer vision models, integrated with Azure Percept.

- Low-Code Development: A development approach requiring minimal coding, using visual interfaces and pre-built models.

| Term | Definition |
|---|---|
| Edge AI | Running AI/ML models locally on devices such as robots instead of on cloud servers. |
| Inference | Using a trained AI model to make predictions on new data, often in real time at the edge. |
| Azure Percept Vision | Camera module for object detection, tracking, and anomaly detection. |
| Azure Percept Audio | Microphone module for voice recognition, keyword spotting, and voice commands. |
| RobotOps | Operations framework for managing robotic systems (CI/CD, monitoring, automation). |
| Azure IoT Hub | Secure cloud gateway that connects, monitors, and manages IoT and robot devices. |
| MLOps / CI/CD | Continuous delivery of AI/ML models to robots at the edge for updates and improvements. |

How It Fits into the RobotOps Lifecycle

The RobotOps lifecycle involves planning, development, testing, deployment, monitoring, and maintenance of robotic systems. Azure Percept aligned with this lifecycle as follows:

| Phase | Azure Percept Role |
|---|---|
| Planning | Define AI-driven robotic tasks using Azure Percept Studio’s pre-built models. |
| Development | Use low-code tools to train and customize vision/speech models for robotic perception. |
| Testing | Prototype and test AI models on Azure Percept DK with real-time inferencing. |
| Deployment | Deploy models to edge devices via Azure IoT Hub, integrated with CI/CD pipelines. |
| Monitoring | Monitor device performance and model accuracy using Azure Monitor and IoT Explorer. |
| Maintenance | Update firmware and retrain models based on data drift, managed via Azure services. |

Architecture & How It Works

Components

Azure Percept consisted of three core components:

- Azure Percept Development Kit (DK):

  - Hardware built around the Azure Percept Vision module, with the Azure Percept Audio module available as an accessory.

  - Supported 80/20 railing for flexible mounting in robotic setups.

  - Included a hardware accelerator (an Intel Movidius Myriad X vision processing unit in the Vision module) for efficient on-device inferencing.

- Azure Percept Studio:

  - A web-based portal for model development, deployment, and device management.

  - Offered pre-built AI models (e.g., object detection, people counting) and low-code interfaces.

- Azure AI and IoT Services:

  - Integrated with Azure Machine Learning, Azure Custom Vision, Azure IoT Hub, and Azure Cognitive Services for model training, device management, and data analytics.

Internal Workflow

- Data Ingestion: The Azure Percept DK captures data (e.g., video streams or audio) using its vision or audio modules.

- Model Development: Developers use Azure Percept Studio to select pre-built models or train custom models with Azure Custom Vision/Speech Studio.

- Model Deployment: Models are deployed to the DK via Azure IoT Hub, enabling on-device inferencing.

- Real-Time Inferencing: The DK processes data locally, reducing latency and bandwidth usage.

- Cloud Integration: Results are sent to Azure cloud services (e.g., Azure Data Lake) for storage, analytics, or visualization.

- Monitoring and Retraining: Azure Monitor tracks device health and performance, while data-drift monitoring in Azure Machine Learning can trigger model retraining.
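The capture, inference, and cloud-integration steps above can be sketched as a small on-device filter that parses one inference result, keeps only confident detections, and builds a compact telemetry payload for IoT Hub. This is a minimal sketch under an assumed message shape (a `NEURAL_NETWORK` list of detections with `label`, `confidence`, and `bbox` fields); that schema, the function names, and the 0.6 threshold are illustrative assumptions, not a documented Azure Percept interface.

```python
import json
from typing import Any

# Hypothetical cut-off: detections below this confidence never leave the device.
CONFIDENCE_THRESHOLD = 0.6


def filter_detections(raw_message: str, threshold: float = CONFIDENCE_THRESHOLD) -> list[dict[str, Any]]:
    """Parse one inference message (assumed schema) and keep only confident detections."""
    message = json.loads(raw_message)
    kept = []
    for detection in message.get("NEURAL_NETWORK", []):
        confidence = float(detection["confidence"])  # normalize: may arrive as a string
        if confidence >= threshold:
            kept.append({"label": detection["label"], "confidence": round(confidence, 3)})
    return kept


def build_telemetry(device_id: str, detections: list[dict[str, Any]]) -> str:
    """Build a compact JSON payload: labels and scores only, no frames or bounding boxes."""
    return json.dumps({"deviceId": device_id, "count": len(detections), "detections": detections})


if __name__ == "__main__":
    sample = json.dumps({
        "NEURAL_NETWORK": [
            {"label": "person", "confidence": "0.91", "bbox": [0.1, 0.2, 0.3, 0.6]},
            {"label": "person", "confidence": "0.42", "bbox": [0.5, 0.1, 0.7, 0.5]},
        ]
    })
    # Only the 0.91 detection survives the filter.
    print(build_telemetry("percept-dk-01", filter_detections(sample)))
```

Filtering before sending is where the latency and bandwidth savings come from: the robot can act on the `filter_detections` output locally, and only the small `build_telemetry` payload crosses the network.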

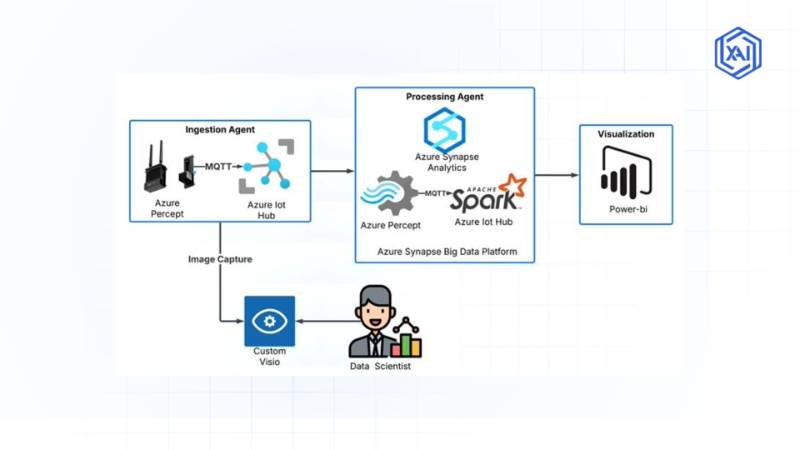

Architecture Diagram Description

The Azure Percept architecture can be visualized as a layered system:

- Edge Layer: Azure Percept DK with vision/audio modules for data capture and inferencing.

- Platform Layer: Azure Percept Studio for model management and low-code development.

- Cloud Layer: Azure IoT Hub, Azure Machine Learning, and Azure Cognitive Services for device management, model training, and analytics.

- Data Flow: Data moves from edge devices to the cloud for storage and analysis, with CI/CD pipelines automating model updates.

Diagram Description (Text-Based):

    ┌───────────────────────────────┐
    │    Azure Machine Learning     │
    │       (Model Training)        │
    └───────────────┬───────────────┘
                    │  Model deployment (CI/CD)
    ┌───────────────▼───────────────┐
    │         Azure IoT Hub         │
    │   (Device Mgmt & Telemetry)   │
    └───────────────▲───────────────┘
                    │  Models down, telemetry up
    ┌───────────────▼───────────────┐
    │       Azure Percept Kit       │
    │ Vision (Camera) + Audio (Mic) │
    │   Edge AI Inference Engine    │
    └───────────────┬───────────────┘
                    │
    ┌───────────────▼───────────────┐
    │             Robot             │
    │      (Decision & Action)      │
    └───────────────────────────────┘

Integration Points with CI/CD or Cloud Tools

- Azure DevOps/GitHub Actions: Automates model building, testing, and deployment to Azure Percept DK.

- Azure IoT Hub: Manages device connectivity and model deployment.

- Azure Monitor: Tracks device health and model performance.

- Azure Machine Learning: Supports automated retraining of models based on data drift.
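In a CI/CD pipeline built on Azure DevOps or GitHub Actions, the testing stage usually ends in a promotion gate that decides whether a newly trained model may be deployed to devices through IoT Hub. The sketch below shows one such gate as a plain Python function a pipeline step could call; the metric fields, thresholds, and names (`EvalResult`, `should_promote`) are hypothetical choices for illustration, not settings of any Azure service.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Offline evaluation metrics for one model version (hypothetical fields)."""
    version: str
    accuracy: float        # fraction of correct predictions on a held-out test set
    p95_latency_ms: float  # 95th-percentile inference latency measured on a test device


def should_promote(candidate: EvalResult, baseline: EvalResult,
                   min_accuracy: float = 0.85,
                   max_accuracy_drop: float = 0.01,
                   latency_budget_ms: float = 100.0) -> tuple[bool, str]:
    """Decide whether the candidate model may replace the baseline on devices."""
    if candidate.accuracy < min_accuracy:
        return False, f"accuracy {candidate.accuracy:.3f} is below the floor {min_accuracy:.3f}"
    if baseline.accuracy - candidate.accuracy > max_accuracy_drop:
        return False, "accuracy regressed beyond the allowed drop"
    if candidate.p95_latency_ms > latency_budget_ms:
        return False, f"p95 latency {candidate.p95_latency_ms:.1f} ms exceeds the budget"
    return True, "all checks passed"


if __name__ == "__main__":
    baseline = EvalResult("v1", accuracy=0.90, p95_latency_ms=80.0)
    candidate = EvalResult("v2", accuracy=0.92, p95_latency_ms=85.0)
    promote, reason = should_promote(candidate, baseline)
    print(f"{candidate.version}: {'promote' if promote else 'reject'} ({reason})")
```

In a pipeline step, exiting with a non-zero status on rejection (for example `sys.exit(0 if promote else 1)`) fails the job, so the deployment stage never pushes the rejected model to the DK.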

Installation & Getting Started

Basic Setup or Prerequisites

- Hardware: Azure Percept DK (discontinued, but compatible with existing units pre-retirement).

- Software:

- Azure subscription (free tier available for testing).

- Azure IoT Hub and Azure Percept Studio access (pre-retirement).

- Development environment (e.g., Visual Studio Code for advanced scripting).

- Connectivity: Wi-Fi or Ethernet for cloud communication.

- Firmware: Latest firmware update (February 2023) for DK Vision and Audio modules.

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

- Set Up Azure Subscription:

  - Sign up at azure.com for a free account.

  - Create an Azure IoT Hub instance in the Azure portal.

- Configure Azure Percept DK:

  - Connect the DK to power.

  - Configure Wi-Fi/Ethernet using the device’s setup experience.

  - Update firmware via the Azure Percept Studio portal (pre-retirement).

- Access Azure Percept Studio:

  - Log in to Azure Percept Studio (pre-retirement access).

  - Register the DK using its device ID and IoT Hub connection string.

- Deploy a Sample Model:

  - In Azure Percept Studio, select a pre-built model (e.g., people detection).

  - Deploy the model to the DK via IoT Hub.

  - Stream video from the DK to verify inferencing, and use Azure IoT Explorer to view the inference telemetry.

- Test and Monitor:

  - Use Azure Monitor to track device performance.

  - View inferencing results in Azure Percept Studio, or in Azure Data Lake after routing IoT Hub messages to storage.

Code Snippet (Sample IoT Hub Connection):

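```bash
# Run on a workstation with the Azure CLI and the azure-iot extension (az extension add --name azure-iot).
HUB_NAME="YourIoTHubName"   # replace with your IoT Hub name
DEVICE_ID="YourDeviceID"    # replace with the ID to register for the DK
# Register the DK as an IoT Hub device identity, then print its connection string.
az iot hub device-identity create --hub-name "$HUB_NAME" --device-id "$DEVICE_ID"
az iot hub device-identity connection-string show --hub-name "$HUB_NAME" --device-id "$DEVICE_ID"
```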
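The second command prints a connection string of the form `HostName=<hub>.azure-devices.net;DeviceId=<id>;SharedAccessKey=<key>`. Device SDKs use it to derive a short-lived shared access signature (SAS) token, which the device presents to IoT Hub over TLS. The sketch below reproduces that derivation with only the Python standard library, following IoT Hub's documented SAS token format (HMAC-SHA256 over the URL-encoded resource URI and an expiry time). It is illustrative: production code should let the Azure IoT device SDK manage tokens, and the hub name, device ID, and key in the demo are made-up placeholders.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split 'Key1=Value1;Key2=Value2' into a dict; values may themselves contain '='."""
    pairs = (item.split("=", 1) for item in conn_str.split(";") if item)
    return {key: value for key, value in pairs}


def generate_device_sas_token(conn_str: str, ttl_seconds: int = 3600,
                              now: Optional[float] = None) -> str:
    """Derive a device-scoped SAS token from a device connection string."""
    fields = parse_connection_string(conn_str)
    resource_uri = f"{fields['HostName']}/devices/{fields['DeviceId']}"
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    # The signed string is the URL-encoded resource URI and the expiry, joined by a newline.
    string_to_sign = f"{urllib.parse.quote_plus(resource_uri)}\n{expiry}"
    key = base64.b64decode(fields["SharedAccessKey"])
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # urlencode percent-encodes the URI and any '+', '/', '=' in the signature.
    query = urllib.parse.urlencode({"sr": resource_uri, "sig": signature, "se": str(expiry)})
    return "SharedAccessSignature " + query


if __name__ == "__main__":
    # Made-up connection string: the key is base64 of a dummy 16-byte value.
    demo = ("HostName=example-hub.azure-devices.net;DeviceId=percept-dk-01;"
            "SharedAccessKey=MDEyMzQ1Njc4OWFiY2RlZg==")
    print(generate_device_sas_token(demo))
```

Because the token expires (`se`), a leaked token is only useful for a limited time, whereas a leaked connection string is not; this is one reason to keep the connection string on the device and send only tokens over the wire.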

Real-World Use Cases

- Retail: Shelf Analytics:

  - Scenario: A retail store uses Azure Percept to monitor shelf stock levels.

  - Implementation: The DK’s vision module detects empty shelves using a pre-built object detection model. Data is sent to Azure Data Lake for inventory analysis.

  - Industry Benefit: Reduces stockouts and improves customer satisfaction.

- Manufacturing: Quality Control:

  - Scenario: A factory deploys Azure Percept to inspect products for defects.

  - Implementation: Custom vision models trained in Azure Custom Vision identify defects in real time and are integrated with robotic arms for automated sorting.

  - Industry Benefit: Enhances production efficiency and reduces manual inspection costs.

- Smart Cities: People Counting:

  - Scenario: A city uses Azure Percept for crowd monitoring in public spaces.

  - Implementation: The open-source people counting solution tracks zone entry/exit events, with data egressed to Azure Data Lake for analytics.

  - Industry Benefit: Improves urban planning and safety.

- Healthcare: Voice-Controlled Assistance:

  - Scenario: A hospital uses Azure Percept Audio for voice-activated patient care systems.

  - Implementation: Pre-built speech models enable voice commands for controlling medical devices, integrated with Azure IoT Hub for device management.

  - Industry Benefit: Enhances patient experience and staff efficiency.
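The zone entry/exit tracking in the smart-cities scenario reduces to a geometric test per tracked person per frame. The sketch below assumes detections have already been associated with stable track IDs and reduced to one point each (for example, the bottom-center of a bounding box) in normalized image coordinates. It uses a standard ray-casting point-in-polygon test and emits an event whenever a track's inside/outside state changes; `ZoneCounter` and the event tuples are illustrative names, not the API of the people counting sample.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]  # (x, y) in normalized image coordinates


def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a rightward ray from p crosses an edge."""
    x, y = p
    inside = False
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        if (y1 > y) != (y2 > y):  # the edge spans the ray's y-coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


@dataclass
class ZoneCounter:
    """Remembers which track IDs are inside one zone and reports transitions."""
    name: str
    polygon: list[Point]
    inside: set[int] = field(default_factory=set)

    def update(self, tracks: dict[int, Point]) -> list[tuple[str, int]]:
        """Compare each track's current position with its previous state."""
        events = []
        for track_id, point in tracks.items():
            now_inside = point_in_polygon(point, self.polygon)
            if now_inside and track_id not in self.inside:
                self.inside.add(track_id)
                events.append(("enter", track_id))
            elif not now_inside and track_id in self.inside:
                self.inside.discard(track_id)
                events.append(("exit", track_id))
        return events


if __name__ == "__main__":
    entrance = ZoneCounter("entrance", [(0.0, 0.0), (0.5, 0.0), (0.5, 1.0), (0.0, 1.0)])
    print(entrance.update({1: (0.25, 0.5), 2: (0.75, 0.5)}))  # [('enter', 1)]
    print(entrance.update({1: (0.75, 0.5), 2: (0.25, 0.5)}))  # [('exit', 1), ('enter', 2)]
```

A track that leaves the camera's view while inside the zone never produces an exit event in this sketch; a real deployment would also expire stale track IDs. Only the event tuples, not the frames, need to be sent on to Azure Data Lake.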

Benefits & Limitations

Key Advantages

- Low-Code Development: Enabled non-experts to build AI models with minimal coding.

- Seamless Integration: Connected with Azure cloud services for scalable RobotOps workflows.

- Real-Time Inferencing: Supported low-latency processing for time-sensitive robotic tasks.

- Hardware Acceleration: Optimized AI performance on edge devices.

Common Challenges or Limitations

- Retirement: No longer supported after March 2023, limiting access to updates and services.

- Hardware Dependency: Required specific Azure Percept DK hardware, which is discontinued.

- Learning Curve: Despite low-code options, advanced customization required familiarity with Azure services.

- Narrow Scope: Focused on specific vision and speech scenarios, unlike general-purpose edge platforms.

Best Practices & Recommendations

Security Tips

- Use Azure IoT Hub’s secure communication protocols (e.g., TLS) for device-cloud interactions.

- Use Azure role-based access control (RBAC) on the IoT Hub and related Azure resources to restrict who can manage devices and deployments.

- Regularly update firmware to address security vulnerabilities (pre-retirement).
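IoT Hub accepts device connections only over TLS, so any custom client (for example, a gateway script running on the robot) should refuse old protocol versions and always verify the server certificate. A minimal sketch with Python's standard `ssl` module, assuming TLS 1.2 as the floor; check Microsoft's current IoT Hub TLS guidance for the exact required version and cipher suites. The function name is hypothetical.

```python
import ssl


def make_iot_tls_context() -> ssl.SSLContext:
    """Client-side TLS context: system CA bundle, hostname checks, TLS 1.2 or newer."""
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse TLS 1.0/1.1 handshakes
    # create_default_context already sets these; repeated here to make the policy explicit.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context


if __name__ == "__main__":
    ctx = make_iot_tls_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The context can be passed to any client that accepts one, for example `http.client.HTTPSConnection("<hub>.azure-devices.net", 443, context=ctx)`, or to an MQTT client connecting on port 8883.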

Performance

- Optimize models for edge inferencing by training with a compact domain in Azure Custom Vision, which produces smaller models that can be exported to edge devices.

- Use Azure Monitor to track latency and adjust resources dynamically.
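Whether latency numbers end up in Azure Monitor or a local log, summarizing them as percentiles rather than averages shows the tail latency that a robot's control loop actually experiences. A minimal sketch using the nearest-rank percentile method; the 100 ms budget, the function names, and the sample timings are made up for illustration.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_report(samples_ms: list[float], budget_ms: float = 100.0) -> dict:
    """Summarize inference latency and flag whether p95 stays within the budget."""
    report = {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}
    report["within_budget"] = report["p95"] <= budget_ms
    return report


if __name__ == "__main__":
    # Made-up per-frame inference times with one slow outlier.
    timings = [42.0, 45.5, 44.1, 47.9, 51.2, 43.3, 120.4, 46.8, 44.9, 45.0]
    print(latency_report(timings))  # {'p50': 45.0, 'p95': 120.4, 'p99': 120.4, 'within_budget': False}
```

The mean of these timings is about 53 ms, which looks healthy; the p95 value exposes the 120 ms outlier that would stall a real-time control loop.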

Maintenance

- Monitor data drift using Azure Machine Learning to trigger model retraining.

- Maintain a CI/CD pipeline with Azure DevOps for automated model updates.
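Azure Machine Learning provides its own drift monitoring; the idea underneath can be shown with a generic statistic. The sketch below swaps in the Population Stability Index (PSI), a common drift measure, to compare the distribution of detection confidence scores in a baseline window with a recent window. The bin edges, the smoothing constant, the 0.2 threshold, and the function names are rule-of-thumb choices for illustration, not Azure Machine Learning defaults.

```python
import math
from typing import Sequence

# Illustrative bins for detection confidence scores in [0, 1].
CONFIDENCE_BINS = (0.0, 0.2, 0.4, 0.6, 0.8, 1.0)


def bin_proportions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-4) -> list[float]:
    """Share of values per bin [edges[i], edges[i+1]); the last bin also includes edges[-1]."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    # Floor empty bins at eps so the logarithm in psi() stays finite.
    return [max(c / total, eps) for c in counts]


def psi(baseline: Sequence[float], recent: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(recent, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def needs_retraining(baseline: Sequence[float], recent: Sequence[float],
                     edges: Sequence[float] = CONFIDENCE_BINS, threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds a rule-of-thumb threshold (0.2 here)."""
    return psi(baseline, recent, edges) > threshold


if __name__ == "__main__":
    baseline_scores = [0.91, 0.88, 0.95, 0.83, 0.9, 0.86, 0.93, 0.89]
    recent_scores = [0.62, 0.55, 0.71, 0.48, 0.66, 0.59, 0.81, 0.52]
    print(round(psi(baseline_scores, recent_scores, CONFIDENCE_BINS), 2),
          needs_retraining(baseline_scores, recent_scores))
```

Falling confidence scores are only a proxy for drift, since labels are rarely available on the edge; a `True` result is best treated as a signal to collect and label fresh data before retraining.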

Compliance Alignment

- Reduce GDPR/HIPAA compliance risk by processing sensitive data on-device and sending only derived results (e.g., counts) to the cloud.

- Use Azure Policy to enforce compliance standards across IoT Hub and related Azure resources.
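On-device processing only reduces exposure if raw frames, audio, or identifiers never slip into outgoing messages. One way to enforce that is an allowlist check applied to every payload just before it is sent to IoT Hub. A minimal sketch; the allowed field names, `PayloadPolicyError`, and `check_payload` are hypothetical and would come from your own data-protection review.

```python
import json

# Hypothetical allowlist: aggregate, non-identifying fields only.
ALLOWED_FIELDS = frozenset({"deviceId", "timestamp", "zone", "count"})


class PayloadPolicyError(ValueError):
    """Raised when an outgoing payload carries fields outside the allowlist."""


def check_payload(payload: dict, allowed: frozenset = ALLOWED_FIELDS) -> str:
    """Serialize the payload for sending only if every top-level field is allowlisted."""
    blocked = set(payload) - allowed
    if blocked:
        raise PayloadPolicyError(f"blocked fields: {sorted(blocked)}")
    return json.dumps(payload, sort_keys=True)


if __name__ == "__main__":
    print(check_payload({"deviceId": "percept-dk-01", "zone": "entrance", "count": 3}))
    try:
        check_payload({"deviceId": "percept-dk-01", "frame": "<jpeg bytes>"})
    except PayloadPolicyError as err:
        print("not sent:", err)
```

The check is deliberately top-level only; payloads with nested objects would need a recursive version of the same rule.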

Automation Ideas

- Automate model deployment using GitHub Actions for Azure.

- Set up event-driven triggers in Azure Data Factory for data processing pipelines.

Comparison with Alternatives

| Feature | Azure Percept | AWS IoT Greengrass | Google Edge TPU |
|---|---|---|---|
| Edge AI Support | Vision and speech models | General-purpose ML models | Vision-focused models |
| Cloud Integration | Azure IoT Hub, Azure Machine Learning | AWS IoT Core, SageMaker | Google Cloud AI, IoT Core (retired August 2023) |
| Low-Code Options | Yes (Azure Percept Studio) | Limited (requires coding) | Limited (requires TensorFlow expertise) |
| Hardware | Azure Percept DK (discontinued) | Customer-supplied Linux or Windows devices | Coral Dev Board/USB Accelerator |
| CI/CD Integration | Azure DevOps, GitHub Actions | AWS CodePipeline | Custom CI/CD pipelines |
| Status | Retired (March 2023) | Active | Active |

When to Choose Azure Percept

- Choose Azure Percept: Only when studying or maintaining existing (pre-retirement) deployments in Azure-centric environments that use low-code edge AI for robotics; it is no longer an option for new projects.

- Choose Alternatives: AWS IoT Greengrass for broader ML support or Google Edge TPU for high-performance vision tasks.

Conclusion

Azure Percept was a pioneering platform for edge AI in RobotOps, offering a streamlined approach to developing and deploying AI-driven robotic solutions. While its retirement in March 2023 limits its use in new projects, its architecture and integration with Azure services provide valuable lessons for modern RobotOps workflows. Future trends in edge AI and RobotOps will likely focus on more flexible hardware-agnostic platforms and advanced automation. Developers can explore Azure’s broader ecosystem, such as Azure IoT Edge and Azure Machine Learning, for similar capabilities.