Introduction & Overview

Edge AI Inference is revolutionizing the way robots operate by enabling real-time decision-making at the network’s edge. In the context of RobotOps (Robotics Operations), it empowers robotic systems to process data locally, reducing latency, enhancing privacy, and improving operational efficiency. This tutorial provides a comprehensive guide to understanding and implementing Edge AI Inference within RobotOps, covering its core concepts, architecture, setup, use cases, benefits, limitations, best practices, and comparisons with alternative approaches.

What is Edge AI Inference?

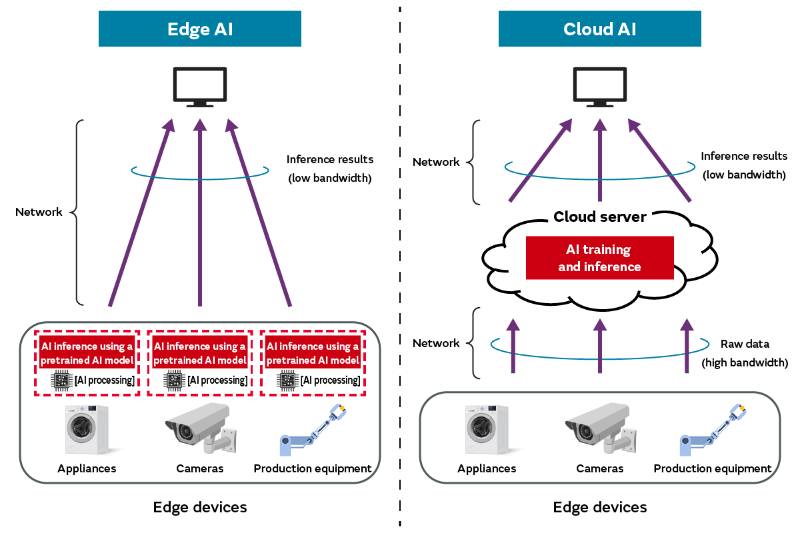

Edge AI Inference refers to the deployment and execution of pre-trained machine learning (ML) models on edge devices, such as robots, IoT devices, or edge servers, to make real-time predictions or decisions without relying on constant cloud connectivity. Unlike cloud-based AI, where data is sent to centralized servers for processing, Edge AI Inference processes data locally, closer to the data source, enabling faster responses and reduced bandwidth usage.

History or Background

The evolution of Edge AI Inference is tied to advancements in edge computing, IoT, and AI efficiency:

- Early 2000s: The rise of IoT devices generated massive data volumes, overwhelming centralized cloud systems and highlighting the need for localized processing.

- 2010s: Advances in hardware, such as GPUs and custom ASICs (e.g., Google’s Tensor Processing Unit and, later, its Edge TPU), made it feasible to run complex AI models on resource-constrained devices.

- 2014–Present: The term “Edge AI” emerged as a field, with significant research focusing on optimizing ML models for edge deployment. Frameworks like TensorFlow Lite and OpenVINO were developed to support efficient inference on edge devices.

- 2020s: The proliferation of 5G and edge computing infrastructure further accelerated Edge AI adoption, particularly in robotics, autonomous vehicles, and smart manufacturing.

This historical context underscores the shift from centralized cloud AI to distributed edge-based inference, driven by the need for low-latency, secure, and scalable solutions.

Why is it Relevant in RobotOps?

RobotOps, the practice of managing and operating robotic systems, relies on seamless integration of hardware, software, and AI to ensure robots perform reliably in dynamic environments. Edge AI Inference is critical in RobotOps because it:

- Reduces Latency: Enables robots to make split-second decisions (e.g., obstacle avoidance in autonomous navigation).

- Enhances Privacy: Processes sensitive data locally, crucial for applications like healthcare robotics.

- Improves Reliability: Allows robots to operate in disconnected or low-bandwidth environments, such as remote warehouses or disaster zones.

- Optimizes Resources: Minimizes cloud dependency, reducing bandwidth and energy costs in large-scale robotic deployments.
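
To make the latency point concrete, the sketch below estimates how far a robot travels before it can react to a new observation. The speed and latency values are illustrative assumptions, not measurements from any particular robot or network.

```python
def blind_distance_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance covered while waiting for a decision, in meters."""
    return speed_m_per_s * (latency_ms / 1000.0)


# Hypothetical figures: a mobile robot moving at 2 m/s
speed = 2.0
for label, latency_ms in [("on-device inference", 50), ("cloud round trip", 500)]:
    distance = blind_distance_m(speed, latency_ms)
    print(f"{label}: {distance:.2f} m travelled before reacting")
```

Under these assumed numbers, a tenfold increase in latency means a tenfold increase in the distance covered before the robot can respond.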

Core Concepts & Terminology

Key Terms and Definitions

- Edge Device: A hardware component (e.g., robot, sensor, or microcontroller) capable of local data processing and inference.

- Inference: The process of using a trained ML model to make predictions or decisions based on new data.

- Model Optimization: Techniques like quantization, pruning, and knowledge distillation to make ML models lightweight for edge deployment.

- AI Accelerators: Specialized hardware (e.g., GPUs, TPUs, NPUs) designed to accelerate AI computations.

- RobotOps Lifecycle: The end-to-end process of designing, deploying, monitoring, and maintaining robotic systems.

| Term | Definition | Example in RobotOps |
|---|---|---|
| Inference | Using a trained model to make predictions | Robot detects if an object is a box or human |
| Edge Device | Hardware running inference locally | NVIDIA Jetson, Raspberry Pi |
| Model Optimization | Techniques to shrink AI models for edge hardware | Quantization, Pruning |
| Latency | Time delay between input & inference output | Robot arm deciding within 50 ms |
| On-Device Learning | Updating models partially on the edge | A robot adapting to new environments |
| MLOps / RobotOps | Applying DevOps to AI in robotics | CI/CD for deploying AI models on robots |
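
Quantization, listed above under Model Optimization, can be illustrated with a small affine (scale and zero-point) scheme that maps floating-point weights to 8-bit codes. This is a simplified sketch in plain Python, not the procedure any specific framework uses; the weight values and function names are made up for illustration.

```python
from typing import List, Tuple


def quantize_uint8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine-quantize floats to 0..255; returns (codes, scale, zero_point)."""
    lo, hi = min(values + [0.0]), max(values + [0.0])  # keep 0.0 inside the range
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for constant input
    zero_point = round(-lo / scale)
    codes = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Map 8-bit codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # hypothetical layer weights
codes, scale, zp = quantize_uint8(weights)
restored = dequantize(codes, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, f"max error = {max_error:.4f}")
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most about one quantization step.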

How It Fits into the RobotOps Lifecycle

Edge AI Inference integrates into the RobotOps lifecycle at multiple stages:

- Development: ML models are trained in the cloud and optimized for edge deployment.

- Deployment: Models are deployed to robotic edge devices using containerization or orchestration tools.

- Operation: Robots perform real-time inference to execute tasks like navigation or object detection.

- Monitoring & Maintenance: Feedback loops collect data from edge devices to retrain or fine-tune models, often using federated learning.

Architecture & How It Works

Components and Internal Workflow

Edge AI Inference systems in RobotOps consist of four main components:

- Edge Devices: Robots or sensors that collect data (e.g., cameras, LIDAR).

- AI Models: Pre-trained, optimized ML models (e.g., CNNs for object detection) deployed on edge devices.

- Hardware Accelerators: Specialized processors (e.g., the GPU on an NVIDIA Jetson module or the Google Coral Edge TPU) for efficient inference.

- Software Frameworks: Tools like TensorFlow Lite, ONNX Runtime, or NVIDIA Isaac ROS for model deployment and inference.

Workflow:

- Data is captured by edge device sensors (e.g., a robot’s camera captures an image).

- The data is preprocessed locally (e.g., resizing, normalization).

- The optimized ML model performs inference to generate predictions (e.g., identifying an obstacle).

- The robot acts on the prediction (e.g., adjusting its path).

- Optional feedback is sent to the cloud for model retraining.
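
The five workflow steps above can be sketched as a chain of small functions. Everything here is a stand-in: the "camera" returns a fixed frame, and the "model" is a brightness average rather than a real network, so the example only shows how the stages connect.

```python
from typing import Callable, List

Frame = List[List[float]]  # a tiny grayscale image as rows of pixel values


def capture() -> Frame:
    """Stand-in for a camera read; returns a fixed 2x2 frame."""
    return [[0.0, 255.0], [128.0, 64.0]]


def preprocess(frame: Frame) -> List[float]:
    """Flatten the frame and scale pixels to the 0..1 range."""
    return [px / 255.0 for row in frame for px in row]


def infer(features: List[float]) -> float:
    """Placeholder model: mean brightness used as an 'obstacle score'."""
    return sum(features) / len(features)


def act(score: float, threshold: float = 0.5) -> str:
    """Map the prediction to a motion command."""
    return "adjust_path" if score >= threshold else "continue"


def run_once(upload: Callable[[dict], None] = lambda record: None) -> str:
    """One pass: capture, preprocess, infer, act, then optional feedback."""
    score = infer(preprocess(capture()))
    command = act(score)
    upload({"score": score, "command": command})  # optional cloud feedback
    return command


print(run_once())
```

On a real robot, `capture` would read from the sensor driver and `infer` would call the inference runtime; the control flow stays the same.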

Architecture Diagram

The architecture can be visualized as a layered system:

- Edge Layer: Robots with sensors and AI accelerators perform local inference.

- Fog Layer: Intermediate nodes (e.g., local servers) handle data aggregation or additional processing.

- Cloud Layer: Centralized servers for model training, updates, and analytics.

The deployment path from the cloud down to the robot looks like this:

    ┌─────────────────────────────┐
    │ Cloud / Data Center         │
    │ - Model Training            │
    │ - Dataset Storage           │
    │ - CI/CD Pipelines           │
    └─────────────┬───────────────┘
                  │
                  ▼
    ┌─────────────────────────────┐
    │ RobotOps Middleware         │
    │ - Model Packaging (ONNX)    │
    │ - Deployment Automation     │
    │ - Monitoring Integration    │
    └─────────────┬───────────────┘
                  │
                  ▼
    ┌─────────────────────────────┐
    │ Edge Device / Robot         │
    │ - Inference Runtime         │
    │ - AI Accelerator (GPU/TPU)  │
    │ - ROS2 Integration          │
    │ - Local Decision Making     │
    └─────────────────────────────┘

Integration Points with CI/CD or Cloud Tools

- CI/CD Pipelines: Tools like Jenkins or GitLab CI/CD automate model deployment to edge devices.

- Containerization: Docker or Podman packages models and dependencies for consistent deployment.

- Orchestration: Kubernetes or KubeEdge manages distributed edge deployments.

- Cloud Feedback: AWS IoT Greengrass or Azure IoT Edge facilitates model updates and telemetry.
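
One common way to automate deployment across a fleet is a staged rollout, where only a fraction of robots receives a new model first. The sketch below shows one possible approach, hashing the robot ID to pick that fraction deterministically. It is not a feature of Jenkins, KubeEdge, or any tool named above; the function name, fleet IDs, and model version string are hypothetical.

```python
import hashlib


def in_rollout(robot_id: str, model_version: str, percent: int) -> bool:
    """Deterministically place a robot in the first `percent` of 100 buckets."""
    digest = hashlib.sha256(f"{model_version}:{robot_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


fleet = [f"robot-{i:03d}" for i in range(200)]  # hypothetical fleet IDs
canary = [r for r in fleet if in_rollout(r, "detector-v2", 10)]
print(f"{len(canary)} of {len(fleet)} robots receive detector-v2 first")
```

Because the bucket depends only on the ID and version, every pipeline run selects the same robots, and raising `percent` only adds robots to the rollout without removing any.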

Installation & Getting Started

Basic Setup or Prerequisites

- Hardware: Edge device (e.g., NVIDIA Jetson Nano, Raspberry Pi with Coral TPU).

- Software: Python 3.8+, TensorFlow Lite or ONNX Runtime, Docker for containerization.

- Network: Optional cloud connectivity for model updates.

- Dependencies: Install required libraries (e.g., numpy, opencv-python).
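
Before starting, it can help to confirm that the software prerequisites are actually present on the device. The following check is a small sketch using only the standard library; the module list mirrors the packages named above (note that opencv-python is imported as `cv2`), and the helper names are made up for this example.

```python
import importlib.util
import sys

# Import names, which can differ from pip package names
REQUIRED_MODULES = ["numpy", "cv2", "tflite_runtime"]


def missing_modules(names):
    """Return the modules from `names` that cannot be found on this system."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def python_ok(minimum=(3, 8)):
    """True when the running interpreter meets the minimum version."""
    return sys.version_info >= minimum


if not python_ok():
    print("Python 3.8 or newer is required")
missing = missing_modules(REQUIRED_MODULES)
print("Missing modules:", ", ".join(missing) if missing else "none")
```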

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up a simple Edge AI Inference pipeline on an NVIDIA Jetson Nano for object detection in a RobotOps scenario.

1. Set Up the Jetson Nano:

   - Flash the Jetson Nano with the latest JetPack release available for the device.

   - Update the system:

         sudo apt update && sudo apt upgrade

2. Install Dependencies:

       sudo apt install python3-pip
       pip3 install numpy opencv-python

3. Install TensorFlow Lite:

       pip3 install tflite-runtime

4. Download a Pre-Trained Model:

   - Obtain a TFLite model (e.g., MobileNet SSD for object detection) from TensorFlow Hub.

         wget https://tfhub.dev/tensorflow/ssd_mobilenet_v2/2 -O model.tflite

   - Check that model.tflite is actually a TFLite model file before continuing. The URL above is a TensorFlow Hub model page, and the interpreter will refuse to load an HTML page or a SavedModel archive.

5. Write Inference Code:

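Save the following as inference.py:

    import cv2
    import numpy as np
    import tflite_runtime.interpreter as tflite

    # Load the TFLite model and allocate its tensors
    interpreter = tflite.Interpreter(model_path="model.tflite")
    interpreter.allocate_tensors()

    # Get input/output details
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # The input tensor is laid out as [batch, height, width, channels]
    _, in_h, in_w, _ = input_details[0]['shape']

    # Capture video from the robot's camera
    cap = cv2.VideoCapture(0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        # Preprocess: OpenCV frames are BGR, and cv2.resize expects (width, height).
        # A float model may also need its pixels scaled; check the model's documentation.
        rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        resized = cv2.resize(rgb, (in_w, in_h))
        input_data = np.expand_dims(resized, axis=0).astype(input_details[0]['dtype'])

        # Perform inference
        interpreter.set_tensor(input_details[0]['index'], input_data)
        interpreter.invoke()
        boxes = interpreter.get_tensor(output_details[0]['index'])

        # Draw bounding boxes. This assumes the first output holds normalized
        # [ymin, xmin, ymax, xmax] rows, as in common SSD TFLite exports;
        # inspect output_details to confirm the layout for your model.
        frame_h, frame_w = frame.shape[:2]
        for ymin, xmin, ymax, xmax in boxes[0]:
            top_left = (int(xmin * frame_w), int(ymin * frame_h))
            bottom_right = (int(xmax * frame_w), int(ymax * frame_h))
            cv2.rectangle(frame, top_left, bottom_right, (0, 255, 0), 2)

        cv2.imshow('Object Detection', frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

6. Run the Inference: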

       python3 inference.py

7. Integrate with RobotOps:

   - Package the code in a Docker container:

         # Use the l4t-base tag that matches the L4T/JetPack release on your device
         FROM nvcr.io/nvidia/l4t-base:r35.2.1
         RUN apt-get update && apt-get install -y python3-pip
         RUN pip3 install numpy opencv-python tflite-runtime
         COPY inference.py model.tflite /app/
         WORKDIR /app
         CMD ["python3", "inference.py"]

   - Deploy using KubeEdge for orchestration.
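
The inference loop in step 5 draws every box the model returns. In practice, low-confidence detections are usually dropped first. The helper below is a sketch of that post-processing step in plain Python. It assumes boxes arrive as normalized [ymin, xmin, ymax, xmax] rows with a parallel list of scores, which is common for SSD-style detectors but should be checked against the model in use; the function name and sample values are hypothetical.

```python
def to_pixel_boxes(boxes, scores, frame_w, frame_h, min_score=0.5):
    """Keep confident detections and convert normalized boxes to pixel corners.

    Assumes each box is [ymin, xmin, ymax, xmax] in the 0..1 range.
    """
    kept = []
    for (ymin, xmin, ymax, xmax), score in zip(boxes, scores):
        if score < min_score:
            continue
        # Clamp to the frame so slightly out-of-range outputs stay drawable
        x1 = max(0, min(frame_w - 1, int(xmin * frame_w)))
        y1 = max(0, min(frame_h - 1, int(ymin * frame_h)))
        x2 = max(0, min(frame_w - 1, int(xmax * frame_w)))
        y2 = max(0, min(frame_h - 1, int(ymax * frame_h)))
        kept.append(((x1, y1), (x2, y2), score))
    return kept


# Hypothetical detector output for a 640x480 frame
boxes = [[0.25, 0.5, 0.75, 1.0], [0.0, 0.0, 0.1, 0.1]]
scores = [0.9, 0.2]
print(to_pixel_boxes(boxes, scores, 640, 480))
```

Each returned tuple holds the two integer corner points that `cv2.rectangle` expects, plus the score for labeling.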

Real-World Use Cases

- Autonomous Warehouse Robots:

- Scenario: Robots navigate warehouses to pick and place items.

- Application: Edge AI Inference runs object detection models to identify items and obstacles, enabling real-time navigation.

- Industry: Logistics.

- Example: Amazon’s Kiva robots use edge inference for efficient inventory management.

- Healthcare Robotics:

- Scenario: Surgical robots assist in minimally invasive procedures.

- Application: Edge AI processes real-time imaging data to guide robotic arms, ensuring precision without cloud latency.

- Industry: Healthcare.

- Example: Intuitive Surgical’s da Vinci system uses edge inference for real-time decision-making.

- Agricultural Drones:

- Scenario: Drones monitor crop health in remote fields.

- Application: Edge AI analyzes multispectral images to detect pests or nutrient deficiencies, enabling immediate action.

- Industry: Agriculture.

- Example: DJI drones with edge AI for precision farming.

- Industrial Inspection Robots:

- Scenario: Robots inspect pipelines or machinery for defects.

- Application: Edge AI performs anomaly detection on sensor data, flagging issues instantly.

- Industry: Manufacturing.

- Example: GE’s inspection robots use edge inference for real-time fault detection.
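
As a rough illustration of the anomaly detection mentioned for inspection robots, the sketch below flags sensor readings that sit far from a rolling baseline. It uses a simple z-score rule rather than a trained model, and the class name, window size, threshold, and readings are all assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings far from the mean of a sliding window."""

    def __init__(self, window=20, z_threshold=3.0):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= 5:  # wait for a few samples before judging
            mu, sigma = mean(self.history), pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            self.history.append(value)  # keep outliers out of the baseline
        return is_anomaly


detector = RollingAnomalyDetector()
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 5.0, 1.02]  # hypothetical vibration data
flags = [detector.update(r) for r in readings]
print(flags)
```

Only the 5.0 reading is flagged here; because it is excluded from the baseline, the normal reading that follows is not affected by it.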

Benefits & Limitations

Key Advantages

- Low Latency: Processes data in milliseconds, critical for real-time robotic tasks.

- Enhanced Privacy: Local data processing reduces the risk of data breaches.

- Offline Capability: Operates in disconnected environments, ideal for remote operations.

- Energy Efficiency: Reduces data transmission, lowering power consumption.
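
Latency claims are easiest to trust when measured on the target device. The helper below is a minimal timing sketch using only the standard library. The workload is a placeholder loop, not a real model; on a device it would be replaced by the actual inference call.

```python
import statistics
import time


def measure_latency_ms(fn, runs=50, warmup=5):
    """Time repeated calls to `fn` and summarize the results in milliseconds."""
    for _ in range(warmup):  # warm caches before timing
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {
        "median": statistics.median(samples),
        "p95": samples[p95_index],
        "max": samples[-1],
    }


def fake_inference():
    """Stand-in workload; replace with the real inference call on a device."""
    sum(i * i for i in range(10_000))


stats = measure_latency_ms(fake_inference)
print({k: round(v, 3) for k, v in stats.items()})
```

Reporting a high percentile alongside the median matters for robots, since occasional slow frames can be more harmful than a slightly higher average.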

Common Challenges or Limitations

- Resource Constraints: Edge devices have limited compute and memory, restricting model complexity.

- Model Updates: Updating models on distributed devices is challenging without robust orchestration.

- Heterogeneity: Diverse hardware architectures complicate deployment and scalability.

- Security Risks: Edge devices are vulnerable to physical tampering or cyberattacks.
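
The resource constraint can be estimated with simple arithmetic: weight storage is roughly the parameter count multiplied by the bytes per weight. The sketch below applies that to a hypothetical parameter count; it ignores activations, runtime overhead, and file-format metadata.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weights_size_mib(num_parameters: int, dtype: str) -> float:
    """Approximate storage for the weights alone, in MiB."""
    return num_parameters * BYTES_PER_WEIGHT[dtype] / (1024 ** 2)


params = 4_000_000  # hypothetical parameter count for a small detector
for dtype in BYTES_PER_WEIGHT:
    print(f"{dtype}: {weights_size_mib(params, dtype):.1f} MiB")
```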

Best Practices & Recommendations

Security Tips

- Use secure boot and trusted execution environments (e.g., ARM TrustZone) to protect models.

- Encrypt data at rest and in transit on edge devices.

- Verify the origin and integrity of every model update before loading it, for example with blockchain-based trust mechanisms.
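
As a lighter-weight illustration of protecting model updates, the sketch below tags a model file with an HMAC and checks the tag before loading. This is a different and much simpler technique than the blockchain-based mechanism mentioned above, shown only to make the idea of verifying an update concrete. The key and model bytes are placeholders.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Produce a hex HMAC-SHA256 tag for a model artifact."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag in constant time before the robot loads the model."""
    return hmac.compare_digest(sign_model(model_bytes, key), expected_tag)


key = b"demo-key-do-not-use"      # hypothetical; provision real keys securely
model = b"\x00fake-tflite-bytes"  # stand-in for the contents of model.tflite
tag = sign_model(model, key)
print(verify_model(model, key, tag), verify_model(model + b"x", key, tag))
```

A shared-key HMAC means every device holding the key could also forge tags, so fleets often prefer asymmetric signatures; the verification step on the robot looks similar either way.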

Performance

- Optimize models using quantization and pruning to reduce size and latency.

- Leverage hardware accelerators like NVIDIA Jetson or Google Coral TPU.

- Use lightweight frameworks like TensorFlow Lite or NCNN for efficient inference.
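
Pruning, mentioned in the first tip above, can be illustrated with magnitude pruning: the weights closest to zero are set to zero so the model can be stored and executed more cheaply. This is a toy sketch on a flat list with made-up weights; real frameworks prune per layer and usually fine-tune afterwards.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # hypothetical layer weights
print(prune_by_magnitude(weights, 0.5))
```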

Maintenance

- Implement automated monitoring with tools like OpenTelemetry for performance tracking.

- Use federated learning for model updates without transferring raw data.

- Schedule regular model retraining based on edge feedback.
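
The federated learning tip above relies on combining model weights rather than raw data. A minimal sketch of the aggregation step, in the style of federated averaging, is shown below. The weight vectors and sample counts are invented, and a real system would also handle communication, client selection, and privacy safeguards.

```python
def federated_average(updates):
    """Weighted average of client weights.

    `updates` is a list of (weights, num_samples) pairs, one per robot.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported")
    size = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(size)]


# Hypothetical local weights from three robots and their sample counts
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300), ([5.0, 6.0], 0)]
print(federated_average(updates))
```

Robots that saw more data pull the average further toward their weights, and a robot that reports zero samples has no influence on the result.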

Compliance Alignment

- Ensure compliance with GDPR or HIPAA for data privacy in healthcare or consumer robotics.

- Document edge data processing for audit trails.

Automation Ideas

- Automate model deployment with CI/CD pipelines using Jenkins or GitLab.

- Use KubeEdge for orchestration across distributed robotic fleets.

Comparison with Alternatives

| Aspect | Edge AI Inference | Cloud AI Inference | Fog AI Inference |
|---|---|---|---|
| Latency | Low (milliseconds) | Higher (network round trip adds tens of milliseconds to seconds) | Moderate (local servers) |
| Privacy | High (local processing) | Low (data sent to cloud) | Moderate (local server processing) |
| Scalability | High (distributed devices) | High (cloud resources) | Moderate (limited by fog nodes) |
| Compute Power | Limited (edge hardware) | High (cloud servers) | Moderate (local servers) |
| Use Case Suitability | Real-time, offline tasks (e.g., robotics) | Large-scale analytics | Hybrid processing |

When to Choose Edge AI Inference

- Choose Edge AI: For real-time, privacy-sensitive, or offline robotic applications (e.g., autonomous navigation, healthcare robotics).

- Choose Cloud AI: For training large models or processing massive datasets.

- Choose Fog AI: For scenarios requiring intermediate processing with limited cloud dependency.
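
The guidance above can be expressed as a small decision helper. The thresholds here are illustrative assumptions, not recommendations from any standard; a real choice would also weigh compute needs, cost, and fleet size.

```python
def choose_tier(max_latency_ms: float, needs_offline: bool, data_is_sensitive: bool) -> str:
    """Pick an inference tier from coarse requirements (thresholds are illustrative)."""
    if needs_offline or data_is_sensitive or max_latency_ms < 100:
        return "edge"
    if max_latency_ms < 1000:
        return "fog"
    return "cloud"


print(choose_tier(50, needs_offline=False, data_is_sensitive=False))     # obstacle avoidance
print(choose_tier(500, needs_offline=False, data_is_sensitive=False))    # site-level aggregation
print(choose_tier(60_000, needs_offline=False, data_is_sensitive=False)) # batch analytics
```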

Conclusion

Edge AI Inference is a game-changer for RobotOps, enabling robots to make intelligent, real-time decisions in diverse environments. Its ability to reduce latency, enhance privacy, and operate offline makes it indispensable for modern robotic systems. However, challenges like resource constraints and security require careful planning and optimization. By following best practices and leveraging tools like TensorFlow Lite and KubeEdge, RobotOps teams can unlock the full potential of Edge AI Inference.

Future Trends

- Federated Learning: Decentralized model training across edge devices.

- 5G Integration: Enhanced connectivity for hybrid edge-cloud systems.

- Advanced Hardware: Next-generation AI accelerators for even faster inference.

Next Steps

- Explore frameworks like NVIDIA Isaac ROS for robotics-specific Edge AI.

- Experiment with model optimization techniques like quantization.

- Join communities like the ROS (Robot Operating System) forum for collaboration.

Resources

- TensorFlow Lite Documentation

- NVIDIA Isaac ROS

- ROS Community