Introduction & Overview

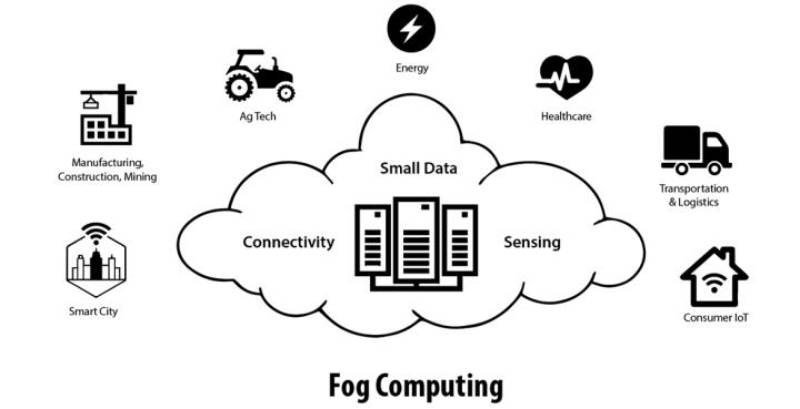

What is Fog Computing?

Fog computing is a decentralized computing paradigm that extends cloud computing capabilities to the edge of the network, closer to where data is generated and consumed. It acts as an intermediary layer between end devices (like IoT sensors or robots) and centralized cloud data centers, enabling faster data processing, reduced latency, and enhanced efficiency for real-time applications. Introduced by Cisco in 2012, fog computing addresses the limitations of cloud-only architectures by distributing computation, storage, and networking services across edge devices and fog nodes.

History or Background

Fog computing emerged as a response to the challenges posed by the rapid growth of Internet of Things (IoT) devices and the need for low-latency, real-time data processing. The timeline below summarizes its evolution:

- 2012: Cisco coined the term “fog computing” to describe a model that brings cloud-like capabilities to the network edge, addressing latency and bandwidth issues in IoT applications.

- 2015: The OpenFog Consortium (now part of the Industry IoT Consortium) was formed by Cisco, Microsoft, Intel, and others to standardize fog computing architectures and promote adoption.

- 2017: The OpenFog Consortium released the OpenFog Reference Architecture, providing a framework for fog computing in IoT and related fields.

- 2019–2022: Fog computing gained traction in industries like manufacturing, smart cities, and autonomous vehicles, with advancements in edge AI and 5G integration.

- 2025: Fog computing continues to evolve, with growing interest in RobotOps driven by the need for real-time automation and orchestration in robotics workflows.

Fog computing builds on earlier concepts like edge computing but introduces a hierarchical, distributed architecture to bridge the gap between edge devices and the cloud, making it particularly suited for robotics and automation.

Why is it Relevant in RobotOps?

RobotOps, or Robotics Operations, refers to the application of DevOps principles to the development, deployment, and management of robotic systems. Fog computing is critical in RobotOps for the following reasons:

- Low Latency: Robots often require millisecond-level response times for tasks like navigation or obstacle avoidance, which fog computing enables by processing data locally.

- Scalability: RobotOps involves managing fleets of robots, and fog computing supports distributed processing to handle large-scale deployments.

- Resilience: By decentralizing computation, fog computing lets robots keep operating during cloud outages or network disruptions, which is critical in industrial or remote environments.

- Data Efficiency: Robots generate vast amounts of sensor data, and fog computing filters and preprocesses this data locally, reducing cloud bandwidth usage.

Core Concepts & Terminology

Key Terms and Definitions

Below is a table of key fog computing terms relevant to RobotOps:

| Term | Definition |
|---|---|
| Fog Node | A physical or virtual device (e.g., router, gateway, or server) that processes data at the network edge. |
| Edge Device | IoT devices or robots generating data, such as sensors, cameras, or robotic arms. |
| Fog Layer | The intermediary layer between edge devices and the cloud, hosting fog nodes. |
| Mist Computing | A lightweight extension of fog computing, using microcontrollers at the extreme edge. |
| Latency | The time delay between data generation and processing, minimized by fog computing. |
| OpenFog Reference Architecture | A standardized framework for designing fog computing systems, developed by the OpenFog Consortium. |

How It Fits into the RobotOps Lifecycle

The RobotOps lifecycle includes stages like design, development, deployment, monitoring, and maintenance of robotic systems. Fog computing integrates as follows:

- Design & Development: Fog nodes support simulation and testing of robotic algorithms locally, reducing dependency on cloud resources.

- Deployment: Fog computing enables over-the-air updates and configuration management for robot fleets via localized nodes.

- Monitoring: Real-time monitoring of robot performance and health data is processed at the fog layer for rapid anomaly detection.

- Maintenance: Fog nodes facilitate predictive maintenance by analyzing sensor data locally to predict failures before they occur.
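As one illustration of how a fog node might coordinate over-the-air updates for a fleet, the sketch below splits robots into waves (a small canary wave first) and halts the rollout if too many robots in a wave fail. The wave fractions, the 10% failure threshold, and the `install_update` callback are hypothetical; production deployment tooling usually provides its own rollout controls.

```python
from typing import Callable, Dict, List, Sequence


def plan_waves(robot_ids: Sequence[str], cumulative: Sequence[float]) -> List[List[str]]:
    """Split a fleet into update waves.

    `cumulative` lists the fraction of the fleet that should be updated after
    each wave, e.g. (0.1, 0.5, 1.0): a ~10% canary, then up to half, then all.
    """
    n = len(robot_ids)
    waves: List[List[str]] = []
    start = 0
    for frac in cumulative:
        if start >= n:
            break
        # Every wave gets at least one robot so the rollout always advances
        end = min(n, max(start + 1, round(frac * n)))
        waves.append(list(robot_ids[start:end]))
        start = end
    if start < n:  # fractions ended below 1.0: put the rest in a final wave
        waves.append(list(robot_ids[start:]))
    return waves


def staged_rollout(
    robot_ids: Sequence[str],
    install_update: Callable[[str], bool],  # hypothetical: True if the robot updated OK
    cumulative: Sequence[float] = (0.1, 0.5, 1.0),
    max_failure_rate: float = 0.1,
) -> Dict[str, bool]:
    """Update wave by wave and halt before the next wave if too many robots failed."""
    results: Dict[str, bool] = {}
    for wave in plan_waves(robot_ids, cumulative):
        outcomes = {rid: install_update(rid) for rid in wave}
        results.update(outcomes)
        failed = sum(1 for ok in outcomes.values() if not ok)
        if failed / len(wave) > max_failure_rate:
            break  # robots in later waves keep their current version
    return results
```

For a fleet of 20 robots, the default fractions produce waves of 2, 8, and 10 robots, so a bad update reaches at most the two canaries before the rollout stops.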

Architecture & How It Works

Components

Fog computing architecture typically consists of three layers:

- Edge Layer: Comprises edge devices (e.g., robots, sensors, cameras) that generate raw data.

- Fog Layer: Includes fog nodes (routers, gateways, edge servers) that preprocess, analyze, and store data locally. These nodes are heterogeneous, supporting various protocols and devices.

- Cloud Layer: Centralized data centers for long-term storage, advanced analytics, and global orchestration.

Internal Workflow

The workflow in fog computing for RobotOps follows these steps:

- Data Collection: Edge devices (robots) collect data from sensors (e.g., LIDAR, cameras).

- Data Preprocessing: Fog nodes filter and normalize data, reducing noise and irrelevant information.

- Local Processing: Fog nodes perform real-time analytics, such as path planning or collision detection, using embedded AI models.

- Data Transmission: Processed data or summaries are sent to the cloud for long-term storage or further analysis.

- Feedback Loop: Fog nodes send control signals back to robots for real-time actions.
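The five steps can be sketched as one processing function running on a fog node. This is a minimal illustration under stated assumptions, not a reference implementation: a LIDAR scan is assumed to arrive as a list of ranges in metres, readings outside a hypothetical valid range are treated as noise, a simple nearest-obstacle check stands in for real collision detection, and the cloud summary and robot command are plain return values.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional

VALID_RANGE_M = (0.05, 30.0)  # hypothetical sensor limits
SAFETY_DISTANCE_M = 0.5       # hypothetical stop threshold


@dataclass
class FogResult:
    command: str   # feedback sent back to the robot
    summary: dict  # compact record forwarded to the cloud


def preprocess(scan: List[float]) -> List[float]:
    """Drop readings outside the sensor's valid range (noise, dropouts)."""
    lo, hi = VALID_RANGE_M
    return [r for r in scan if lo <= r <= hi]


def process_scan(robot_id: str, scan: List[float]) -> FogResult:
    # 1. Data collection happened on the robot; `scan` is what it sent.
    # 2. Preprocessing: filter invalid readings.
    clean = preprocess(scan)
    # 3. Local processing: a simple nearest-obstacle check.
    nearest: Optional[float] = min(clean) if clean else None
    if nearest is None or nearest < SAFETY_DISTANCE_M:
        command = "STOP"  # no usable data, or an obstacle is too close
    else:
        command = "CONTINUE"
    # 4. Transmission: only a small summary goes to the cloud, not the raw scan.
    summary = {
        "robot": robot_id,
        "raw_points": len(scan),
        "valid_points": len(clean),
        "nearest_m": nearest,
        "mean_m": round(mean(clean), 3) if clean else None,
        "command": command,
    }
    # 5. Feedback loop: the command is returned to the robot.
    return FogResult(command=command, summary=summary)
```

Failing safe (returning `STOP` when no valid readings remain) is a deliberate choice here: a fog node that cannot see should not tell a robot to keep moving.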

Architecture Diagram

The diagram below shows the three layers and how data flows between them:

```
+-------------------+          +---------------------+
|    Cloud Layer    |          |  RobotOps Control   |
| (AWS, Azure IoT)  | <------> |  CI/CD + ML Ops     |
+-------------------+          +---------------------+
          ^
          |
  (Aggregated Data)
          |
+-------------------+
|     Fog Layer     |
| (Gateways, Edge   |
| Servers, Routers) | <--> Local Storage, Security
+-------------------+
          ^
          |
  (Raw Sensor Data)
          |
+-------------------+
|   Robot Devices   |
| (Cameras, Arms,   |
| Sensors, Drones)  |
+-------------------+
```

Integration Points with CI/CD or Cloud Tools

Fog computing integrates with RobotOps CI/CD pipelines and cloud tools as follows:

- CI/CD Integration: Fog nodes can host containerized robotic applications (e.g., using Docker or Kubernetes) for seamless deployment and updates.

- Cloud Tools: Tools like AWS IoT Greengrass or Azure IoT Edge extend fog computing capabilities by deploying cloud-managed services to edge devices.

- APIs: Fog nodes use RESTful APIs or MQTT for communication with CI/CD systems and cloud platforms, ensuring interoperability.
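Because fog nodes often route robot telemetry by MQTT topic, it helps to know how subscription filters match topics. The function below is a sketch of the wildcard rules from the MQTT specification: `+` matches exactly one level, `#` matches all remaining levels (including the parent level) and must come last, and filters that start with a wildcard do not match topics beginning with `$`. It is for illustration only; brokers and client libraries already implement this (paho-mqtt, for example, provides `topic_matches_sub`).

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Wildcards at the first level must not match system topics such as $SYS/...
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent and all children
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch
    return len(f_levels) == len(t_levels)
```

A fleet-wide subscription such as `robot/+/lidar` therefore receives `robot/amr1/lidar` but not `robot/amr1/lidar/raw`, which would need `robot/+/lidar/#`.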

Installation & Getting Started

Basic Setup or Prerequisites

To set up a fog computing environment for RobotOps, you need:

- Hardware: Edge devices (e.g., Raspberry Pi, NVIDIA Jetson), fog nodes (e.g., Cisco IR1101 router or Intel NUC), and cloud access (e.g., AWS, Azure).

- Software: Docker for containerization, an MQTT broker (e.g., Mosquitto), a fog computing framework (e.g., AWS IoT Greengrass), and a Java runtime, which the Greengrass V2 Core software requires.

- Network: High-speed LAN or 5G for connectivity between edge devices, fog nodes, and the cloud.

- OS: Linux-based OS (e.g., Ubuntu) for fog nodes and edge devices.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

Below is a beginner-friendly guide to set up a fog computing environment using AWS IoT Greengrass on a Raspberry Pi as an edge device and an Intel NUC as a fog node.

1. Install Dependencies on the Fog Node (Intel NUC):

- Install Ubuntu 20.04 or later.

- Install Docker:

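```bash
sudo apt update
sudo apt install docker.io -y
sudo systemctl start docker
sudo systemctl enable docker
```

- Install the Mosquitto MQTT broker and command-line clients with `sudo apt install mosquitto mosquitto-clients -y`, so edge devices have a broker to publish to on port 1883. Mosquitto 2.0 and later accept only local connections until a listener is configured, so add `listener 1883` and, for a LAN test only, `allow_anonymous true` to a file under `/etc/mosquitto/conf.d/`, then run `sudo systemctl restart mosquitto`.

- Install AWS IoT Greengrass: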

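```bash
sudo apt install default-jdk unzip -y   # Greengrass V2 Core software requires Java 8 or later
curl -s https://d2s8p88vqu9w66.cloudfront.net/releases/greengrass-nucleus-latest.zip > greengrass-nucleus-latest.zip
unzip greengrass-nucleus-latest.zip -d GreengrassInstaller
sudo -E java -Droot="/greengrass/v2" -Dlog.store=FILE -jar ./GreengrassInstaller/lib/Greengrass.jar \
  --aws-region <your-region> --thing-name <your-core-device> --thing-group-name <your-thing-group> \
  --component-default-user ggc_user:ggc_group --provision true --setup-system-service true --deploy-dev-tools true
```

The installer uses the AWS credentials exported in your shell (hence `sudo -E`) to provision the core device, and `--deploy-dev-tools true` adds the Greengrass CLI used in the later steps.

2. Configure AWS IoT Greengrass: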

- Create an AWS account and navigate to the IoT Core console.

- Register the core device and add it to a thing group (Greengrass V2 uses thing groups rather than the V1 "Greengrass groups"), following the AWS documentation.

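- Create a local deployment of your component recipes and artifacts with the Greengrass CLI, replacing the placeholders with your component's name and version:

```bash
sudo /greengrass/v2/bin/greengrass-cli deployment create \
  --recipeDir /greengrass/v2/recipes \
  --artifactDir /greengrass/v2/artifacts \
  --merge "<your-component-name>=<version>" \
  --groupId <your-group-id>
```

3. Set Up the Edge Device (Raspberry Pi):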

- Install Raspberry Pi OS (64-bit).

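- Install the Mosquitto command-line clients and the Paho MQTT library used by the sample script:

```bash
sudo apt install mosquitto-clients python3-paho-mqtt -y
```

- Connect the Raspberry Pi to the fog node via Wi-Fi or Ethernet.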

4. Deploy a Sample Application:

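- Create a Python script named `sensor.py` that simulates robot sensor data (replace `fog-node-ip` with the fog node's address):

```python
import time

import paho.mqtt.client as mqtt

BROKER_HOST = "fog-node-ip"  # replace with the fog node's IP address or hostname
BROKER_PORT = 1883

# paho-mqtt 2.x requires a callback API version argument; 1.x has no such enum
try:
    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
except AttributeError:
    client = mqtt.Client()

client.connect(BROKER_HOST, BROKER_PORT, keepalive=60)
client.loop_start()  # handle network traffic in a background thread

try:
    while True:
        # Publish one simulated reading per second
        client.publish("robot/sensor", "temperature:25C")
        time.sleep(1)
except KeyboardInterrupt:
    pass  # stop cleanly on Ctrl+C
finally:
    client.loop_stop()
    client.disconnect()
```

- Copy the script to the Raspberry Pi and run it:

```bash
python3 sensor.py
```

5. Monitor Data on the Fog Node: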

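- Check with the Greengrass CLI that the deployed components are running (`component list` shows component names, versions, and states, not message payloads):

```bash
sudo /greengrass/v2/bin/greengrass-cli component list
```

- To watch the sensor messages themselves, subscribe on the fog node's broker, for example with `mosquitto_sub -h localhost -t robot/sensor -v` (this requires `mosquitto-clients` on the fog node).

6. Connect to the Cloud: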

- Configure Greengrass to forward data to AWS IoT Core for long-term storage.
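Rather than relaying every message, a fog node would typically aggregate readings before forwarding them. The sketch below parses payloads in the `temperature:25C` format published by `sensor.py` and condenses one window of them into a single JSON summary. The payload format comes from the sample script; the summary fields are hypothetical, and wiring this into an MQTT subscriber or a Greengrass component is left out.

```python
import json
import re
from statistics import mean
from typing import Iterable, Optional, Tuple

# Matches payloads like "temperature:25C" or "temperature:-3.5C" (format from sensor.py)
PAYLOAD_RE = re.compile(r"^(?P<name>\w+):(?P<value>-?\d+(?:\.\d+)?)(?P<unit>[A-Za-z%]*)$")


def parse_payload(payload: str) -> Optional[Tuple[str, float, str]]:
    """Return (metric, value, unit), or None if the payload is malformed."""
    match = PAYLOAD_RE.match(payload.strip())
    if match is None:
        return None
    return match["name"], float(match["value"]), match["unit"]


def summarize(robot_id: str, payloads: Iterable[str]) -> str:
    """Condense one window of raw payloads into a single JSON message for the cloud."""
    raw = list(payloads)
    values = [parsed[1] for parsed in map(parse_payload, raw) if parsed is not None]
    summary = {
        "robot": robot_id,
        "count": len(values),
        "dropped": len(raw) - len(values),  # malformed payloads
        "min": min(values) if values else None,
        "max": max(values) if values else None,
        "mean": round(mean(values), 2) if values else None,
    }
    return json.dumps(summary)
```

At one reading per second, a 60-second window turns 60 messages into one, which is the bandwidth saving the fog layer is meant to provide.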

Real-World Use Cases

RobotOps Scenarios

- Warehouse Robotics:

- Scenario: Autonomous mobile robots (AMRs) in a warehouse use fog computing to process LIDAR and camera data for real-time navigation and collision avoidance.

- Implementation: Fog nodes preprocess sensor data to compute optimal paths, reducing latency compared to cloud-based processing.

- Industry: Logistics and e-commerce.

- Industrial Automation:

- Smart City Robotics:

- Healthcare Robotics:

- Scenario: Surgical robots use fog computing to process real-time patient data (e.g., heart rate, imaging) during operations.

- Implementation: Fog nodes ensure low-latency data processing for precise robotic movements, with critical data sent to the cloud for post-operation analysis.

- Industry: Healthcare.
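For the warehouse scenario, "compute optimal paths" on a fog node could be as simple as a shortest-path search over an occupancy grid built from the robots' sensor data. The sketch below uses A* with a Manhattan-distance heuristic on a 4-connected grid; the grid encoding (0 = free, 1 = blocked) and the function name are assumptions for illustration, and production AMR stacks use considerably more elaborate planners.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where 0 = free and 1 = blocked."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:  # Manhattan distance: admissible for unit-cost moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in came_from:  # walk back to the start
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable
```

Running the search on the fog node rather than on each robot lets the planner use a shared map that merges obstacles seen by the whole fleet.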

Benefits & Limitations

Key Advantages

- Reduced Latency: Processing data at the edge enables millisecond-level response times, critical for robotic navigation and control.

- Bandwidth Efficiency: Local preprocessing reduces data sent to the cloud, lowering network costs.

- Offline Operation: Fog nodes enable robots to function during network outages, enhancing reliability.

- Enhanced Security: Local data processing reduces exposure to network-based attacks.
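Offline operation usually relies on store-and-forward: the fog node keeps serving robots locally and queues cloud-bound messages until the uplink returns. The class below is a minimal in-memory sketch; the `send` callable (returning True when the cloud accepts a message) is hypothetical, and dropping the oldest messages when the buffer is full is one policy choice among several (a real system might persist to disk instead).

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer cloud-bound messages while the uplink is down and flush them when it returns."""

    def __init__(self, send: Callable[[str], bool], max_messages: int = 10_000):
        self._send = send  # hypothetical uplink; returns True when delivered
        self._queue: Deque[str] = deque(maxlen=max_messages)  # oldest dropped when full

    def publish(self, message: str) -> None:
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Send queued messages in order, stopping at the first failure; return how many were sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # uplink still down; keep the message for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```

Sending strictly in order and stopping at the first failure preserves message ordering, at the cost of one failed send blocking everything behind it.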

Common Challenges or Limitations

| Challenge | Description |
|---|---|
| Resource Constraints | Fog nodes have limited compute and storage compared to cloud data centers. |
| Complex Management | A distributed architecture requires sophisticated orchestration and monitoring. |
| Security Risks | Fog nodes are exposed to physical tampering and to network attacks such as IP spoofing. |
| Limited Standardization | Fog computing adoption is still emerging, so standardized tools and support are limited. |

Best Practices & Recommendations

Security Tips

- Encryption: Use TLS for data transmission between edge devices, fog nodes, and the cloud.

- Access Control: Implement role-based access control (RBAC) for fog node management.

- Regular Updates: Apply security patches to fog nodes and edge devices to mitigate vulnerabilities.
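For the TLS recommendation, Python's standard `ssl` module can build a client context that verifies the server certificate and hostname and refuses protocol versions older than TLS 1.2. This is a sketch: the file paths are placeholders, mutual TLS is optional, and the resulting context could then be handed to an MQTT client (paho-mqtt, for instance, accepts one through `Client.tls_set_context`).

```python
import ssl
from typing import Optional


def make_tls_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Client-side TLS context for robot-to-fog or fog-to-cloud links."""
    # Verifies server certificates and hostnames; uses the system CA store if ca_file is None
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file is not None:
        # Mutual TLS: present a per-device certificate to the fog node or broker
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

Issuing each robot its own client certificate also supports the access-control point: a compromised device can be revoked individually.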

Performance

- Optimize Resource Allocation: Use AI-driven algorithms (e.g., equilibrium optimizer) for efficient task scheduling on fog nodes.

- Monitor Latency: Continuously monitor fog node performance to ensure low-latency processing.
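The equilibrium optimizer is a metaheuristic; as a much simpler baseline, the sketch below assigns tasks greedily to whichever fog node would finish them earliest, handling the largest tasks first. This is a swapped-in heuristic for illustration, not the equilibrium optimizer, and the task costs and node speeds are hypothetical inputs.

```python
from typing import Dict, Tuple


def schedule(tasks: Dict[str, float], node_speed: Dict[str, float]) -> Tuple[Dict[str, str], float]:
    """Greedy earliest-finish-time assignment of tasks to fog nodes.

    tasks: task name -> work units; node_speed: fog node -> work units per second.
    Returns (task -> node, makespan in seconds).
    """
    finish = {node: 0.0 for node in node_speed}
    assignment: Dict[str, str] = {}
    # Placing the largest tasks first usually balances load better
    for task, work in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        best = min(finish, key=lambda n: finish[n] + work / node_speed[n])
        finish[best] += work / node_speed[best]
        assignment[task] = best
    return assignment, max(finish.values(), default=0.0)
```

A baseline like this is also useful for judging whether a more sophisticated optimizer actually improves the makespan on your workload.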

Maintenance

- Automated Monitoring: Use tools like Prometheus to monitor fog node health and performance.

- Predictive Maintenance: Leverage fog nodes for real-time analytics to predict hardware failures.
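Predictive maintenance on a fog node can start with something as simple as flagging readings that drift far from recent behaviour. The sketch below keeps a rolling window per sensor and flags a reading whose z-score against that window exceeds a threshold; the window size, threshold, and sensor names are assumptions, and real predictive-maintenance models are usually trained on historical failure data.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Dict


class RollingZScore:
    """Flag readings that deviate strongly from a sensor's recent history."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.window, self.threshold, self.min_samples = window, threshold, min_samples
        self._history: Dict[str, Deque[float]] = {}

    def update(self, sensor: str, value: float) -> bool:
        """Record a reading and return True if it looks anomalous."""
        hist = self._history.setdefault(sensor, deque(maxlen=self.window))
        anomalous = False
        if len(hist) >= self.min_samples:  # need some history before judging
            mu, sigma = mean(hist), pstdev(hist)
            if sigma == 0:
                anomalous = value != mu  # flat history: any change stands out
            else:
                anomalous = abs(value - mu) / sigma > self.threshold
        hist.append(value)
        return anomalous
```

Because the check needs only the last few dozen readings per sensor, it fits comfortably on a fog node and can raise an alert without a round trip to the cloud.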

Compliance Alignment

- Ensure compliance with data privacy regulations (e.g., GDPR) by processing sensitive data locally on fog nodes.

- Use standardized frameworks like the OpenFog Reference Architecture for consistent deployments.
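One way to act on "processing sensitive data locally" is to strip or pseudonymize personal fields on the fog node before anything leaves the site. The sketch below replaces an identifier with a keyed HMAC-SHA256 digest and drops free-text fields; the field names and key handling are hypothetical. Under the GDPR, pseudonymized data is still personal data, so this reduces exposure but does not by itself take the data out of scope.

```python
import hashlib
import hmac
from typing import Any, Dict

SENSITIVE_DROP = {"patient_name", "notes"}  # hypothetical fields removed entirely
SENSITIVE_HASH = {"patient_id"}             # hypothetical fields pseudonymized


def pseudonymize(record: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    """Return a copy that is safer to forward to the cloud; the key stays on the fog node."""
    out: Dict[str, Any] = {}
    for field, value in record.items():
        if field in SENSITIVE_DROP:
            continue
        if field in SENSITIVE_HASH:
            # Keyed hash: same input -> same token, but not reversible without the key
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            out[field] = digest.hexdigest()
        else:
            out[field] = value
    return out
```

Using a keyed HMAC rather than a plain hash matters here: identifiers like patient numbers come from a small space and a plain hash could be reversed by brute force.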

Automation Ideas

- Automate fog node deployment using CI/CD pipelines with tools like Jenkins or GitLab.

- Use container orchestration (e.g., Kubernetes) for scalable fog node management.

Comparison with Alternatives

Comparison Table

| Feature | Fog Computing | Edge Computing | Cloud Computing |
|---|---|---|---|
| Processing Location | Near edge devices | On edge devices | Centralized data centers |
| Latency | Low | Very low | High |
| Scalability | High | Moderate | Very high |
| Offline Capability | Yes | Yes | No |
| Use Case | RobotOps, smart cities | Autonomous robots | Big data analytics |

When to Choose Fog Computing

- Choose Fog Computing: When low latency and distributed processing are needed, such as in RobotOps for real-time robotic control and fleet management.

- Choose Edge Computing: For resource-constrained environments where processing must occur directly on the robot.

- Choose Cloud Computing: For large-scale analytics or long-term data storage; round-trip latency to remote data centers makes it unsuitable for real-time robotic tasks.
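The guidance above largely comes down to comparing a task's latency budget with the round-trip time to each tier. The function below is a rough sketch of that decision; the tier names mirror the table, while the inputs (a latency budget, measured round-trip times, and whether the task must survive a cloud outage) are assumptions you would measure for your own deployment, and preferring the most centralized tier that qualifies is one possible policy, not a rule.

```python
from typing import Dict


def choose_tier(latency_budget_ms: float, rtt_ms: Dict[str, float], needs_offline: bool) -> str:
    """Pick the most centralized tier that still meets the latency budget.

    rtt_ms: measured round-trip times per tier, e.g. {"fog": ..., "cloud": ...}.
    """
    # Centralized tiers offer more compute and simpler management, so try them first
    for tier in ("cloud", "fog"):
        if tier == "cloud" and needs_offline:
            continue  # cloud-hosted tasks stop when the uplink drops
        if tier in rtt_ms and rtt_ms[tier] <= latency_budget_ms:
            return tier
    return "edge"  # nothing else qualifies: run the task on the robot itself
```

With a 10 ms budget and round trips of 4 ms to the fog node and 80 ms to the cloud, this picks the fog tier; a 1 ms budget falls through to on-robot processing.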

Conclusion

Fog computing is a transformative paradigm for RobotOps, enabling low-latency, scalable, and resilient robotic systems. By bridging the gap between edge devices and the cloud, it supports real-time processing critical for applications like warehouse robotics, industrial automation, and smart cities. Despite challenges like resource constraints and complex management, adopting best practices like encryption, automated monitoring, and standardized architectures can maximize its benefits.

Future Trends

- Edge AI Integration: Combining fog computing with AI models for advanced robotic decision-making.

- 5G Synergy: Leveraging 5G networks for faster communication between fog nodes and edge devices.

- Standardization: Increased adoption of frameworks like the OpenFog Reference Architecture for interoperability.

Next Steps

- Explore hands-on projects with AWS IoT Greengrass or Azure IoT Edge.

- Join communities like the Industry IoT Consortium (IIC) for resources and collaboration.