Introduction & Overview

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the source of data generation, reducing latency and optimizing bandwidth. In the context of RobotOps (Robotic Operations), which focuses on the development, deployment, and management of robotic systems, edge computing plays a pivotal role in enabling real-time decision-making, enhancing autonomy, and improving operational efficiency. This tutorial provides a detailed exploration of edge computing tailored to RobotOps, covering its core concepts, architecture, practical setup, use cases, benefits, limitations, best practices, and comparisons with alternatives.

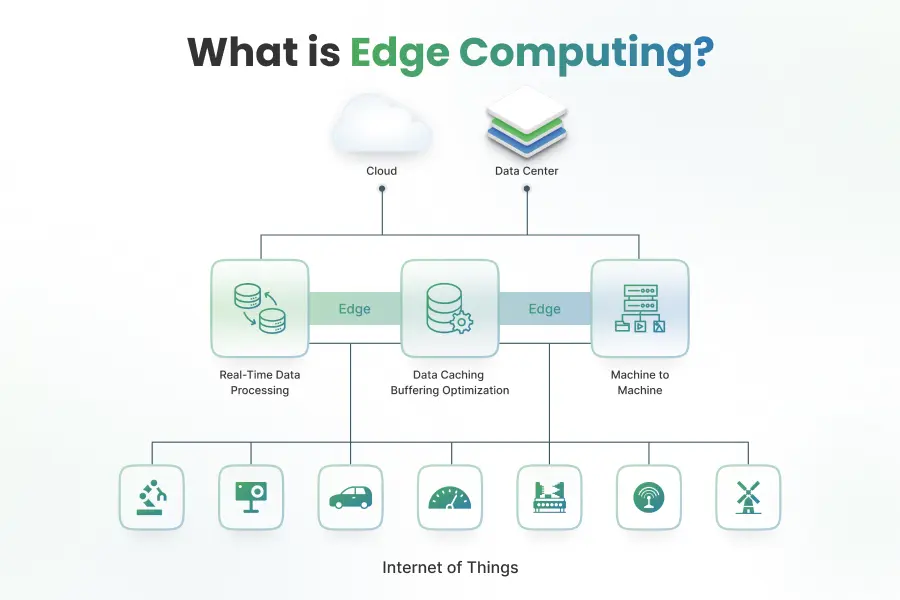

What is Edge Computing?

Edge computing involves processing data at or near the location where it is generated, rather than relying on centralized cloud servers. This approach minimizes latency, reduces bandwidth usage, and enhances data privacy by keeping sensitive information local. In RobotOps, edge computing enables robots to process sensor data, make autonomous decisions, and interact with their environment in real time.

History or Background

Edge computing evolved as a response to the limitations of traditional cloud computing, particularly in handling the massive data generated by Internet of Things (IoT) devices and robotic systems. Below is a brief history of edge computing:

- Late 1990s to early 2000s: The idea behind edge computing emerged with content delivery networks (CDNs), which cached content closer to users to reduce latency for web delivery.

- 2010s: The rise of IoT and mobile devices highlighted the need for localized data processing. Technologies like fog computing and mobile edge computing (MEC) began to take shape, focusing on decentralizing computation.

- 2015 onwards: The advent of 5G technology and the proliferation of IoT devices accelerated edge computing adoption. Industry groups such as the Linux Foundation’s LF Edge and ETSI’s MEC group published edge computing frameworks and specifications.

- 2020s: Edge computing became integral to industries like robotics, autonomous vehicles, and smart cities, driven by advancements in AI, machine learning, and lightweight virtualization.

In RobotOps, edge computing gained traction as robotic systems required low-latency processing for tasks like navigation, obstacle avoidance, and real-time analytics, which centralized cloud solutions could not efficiently support.

Why is it Relevant in RobotOps?

RobotOps encompasses the lifecycle of robotic systems, including design, development, deployment, and maintenance. Edge computing is critical in this context because:

- Real-Time Processing: Robots often operate in dynamic environments requiring immediate responses (e.g., autonomous navigation in warehouses).

- Bandwidth Efficiency: Robots generate vast amounts of sensor data (e.g., LIDAR, cameras), and transmitting all data to the cloud is impractical.

- Autonomy: Edge computing enables robots to make decisions locally, reducing dependency on constant cloud connectivity.

- Security and Privacy: Local data processing minimizes the risk of data breaches during transmission, crucial for sensitive robotic applications like healthcare or defense.

Core Concepts & Terminology

Key Terms and Definitions

- Edge Device: A computing device (e.g., gateway, router, or robot) that processes data locally at the network’s edge.

- Edge Node: A localized data center or server that handles computation and storage near the data source.

- Fog Computing: An extension of edge computing that includes intermediate layers between edge devices and the cloud for additional processing.

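- Mobile Edge Computing (MEC): Edge computing tailored for mobile networks, leveraging 5G for low-latency applications. ETSI now expands the acronym as Multi-access Edge Computing.

- Latency: The delay between data being generated and a result being available. Edge computing reduces it by avoiding the round trip to a distant data center.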

- IoT (Internet of Things): The network of connected devices that generate data, often processed at the edge in RobotOps.

| Term | Definition | Example in RobotOps |
|---|---|---|
| Edge Node | Device/server where computation is performed locally. | Onboard robot GPU, factory server |
| Latency | Time delay in processing data. | Millisecond response for obstacle avoidance |
| Fog Computing | Intermediate layer between edge and cloud. | Local gateway aggregating multiple robot feeds |
| Inference at Edge | Running AI/ML models directly on robots. | Edge AI chip detecting defective products |
| Digital Twin | Virtual replica of robots, synced via edge + cloud. | Remote monitoring of warehouse robots |

How It Fits into the RobotOps Lifecycle

Edge computing integrates into the RobotOps lifecycle as follows:

- Design and Development: Engineers design robotic systems with edge-capable hardware (e.g., NVIDIA Jetson, Raspberry Pi) and software frameworks (e.g., ROS – Robot Operating System).

- Deployment: Edge nodes are deployed to manage robot fleets, process sensor data, and execute control algorithms locally.

- Monitoring and Maintenance: Edge computing enables real-time monitoring of robot health and performance, reducing reliance on cloud-based analytics.

- Updates: Over-the-air (OTA) updates for robotic software can be managed through edge nodes, ensuring scalability and minimal downtime.
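
The Updates step can be illustrated with a small sketch of the check an edge node might run before applying an OTA release. It assumes, hypothetically, that each release ships with a manifest carrying a version string and a SHA-256 digest. The manifest fields and function names are illustrative and do not come from any particular OTA framework.

```python
import hashlib
import hmac


def parse_version(version):
    """Turn '1.4.2' into (1, 4, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def should_apply_update(installed, manifest, artifact):
    """Return True only for a newer release whose bytes match the manifest digest."""
    if parse_version(manifest["version"]) <= parse_version(installed):
        return False  # same or older release: nothing to do
    digest = hashlib.sha256(artifact).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(digest, manifest["sha256"])


artifact = b"robot-firmware-build"  # stand-in for a downloaded update file
manifest = {"version": "1.4.0", "sha256": hashlib.sha256(artifact).hexdigest()}

print(should_apply_update("1.3.9", manifest, artifact))         # newer and intact
print(should_apply_update("1.3.9", manifest, artifact + b"x"))  # corrupted download
print(should_apply_update("1.4.0", manifest, artifact))         # already installed
```

Comparing versions as integer tuples rather than strings means that 1.10.0 is correctly treated as newer than 1.9.9.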

Architecture & How It Works

Components

Edge computing architecture in RobotOps typically includes:

- Edge Devices: Robots or IoT sensors (e.g., cameras, LIDAR) that generate data.

- Edge Gateway: A local server or gateway that aggregates and processes data from multiple devices (e.g., Intel NUC, Raspberry Pi clusters).

- Edge Node: A small-scale data center for computation-intensive tasks like machine learning inference.

- Cloud Layer: Centralized servers for long-term storage, training AI models, or handling non-time-critical tasks.

- Communication Protocols: Lightweight protocols like MQTT or CoAP for efficient data exchange between edge devices and nodes.
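
To give a feel for why payload size matters on lightweight protocols, the sketch below encodes the same reading as JSON and as a fixed binary layout. The field names, the sample values, and the byte layout are assumptions chosen for illustration. Neither MQTT nor CoAP prescribes a payload format.

```python
import json
import struct

# Hypothetical reading: robot id, Unix timestamp, LiDAR range in metres.
reading = {"robot_id": 7, "ts": 1700000000.25, "range_m": 1.5}

# Human-readable encoding: easy to debug, larger on the wire.
json_payload = json.dumps(reading, separators=(",", ":")).encode("utf-8")

# Fixed binary layout: little-endian uint16, float64, float32 (14 bytes).
LAYOUT = struct.Struct("<Hdf")
binary_payload = LAYOUT.pack(reading["robot_id"], reading["ts"], reading["range_m"])


def decode(payload):
    """Unpack a binary payload back into the dictionary form."""
    robot_id, ts, range_m = LAYOUT.unpack(payload)
    return {"robot_id": robot_id, "ts": ts, "range_m": range_m}


print(len(json_payload), len(binary_payload))
print(decode(binary_payload) == reading)
```

The binary form is smaller, but both sides must agree on the layout in advance, so JSON is often the more practical choice while a system is still changing.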

Internal Workflow

- Data Generation: Robots generate data via sensors (e.g., temperature, video, GPS).

- Local Processing: Edge devices or gateways filter, aggregate, or analyze data using embedded AI models or rule-based systems.

- Decision-Making: Processed data triggers real-time actions (e.g., navigation, object detection).

- Data Offloading: Non-critical or aggregated data is sent to the cloud for storage or further analysis.

- Feedback Loop: Cloud-based insights (e.g., updated AI models) are sent back to edge devices for improved performance.
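
The workflow above can be sketched in a few lines of plain Python. This is a simplified illustration with simulated readings. The stop threshold, the function names, and the summary fields are assumptions, and a real robot would use its own sensor drivers and control stack.

```python
from statistics import mean

STOP_DISTANCE_M = 0.5  # hypothetical safety threshold


def decide(distance_m):
    """Decision-making: act on each reading locally, with no cloud round trip."""
    return "stop" if distance_m < STOP_DISTANCE_M else "continue"


def process_window(readings):
    """Local processing plus data offloading for one window of range readings."""
    valid = [r for r in readings if r is not None and r > 0]  # drop sensor dropouts
    if not valid:
        return [], {"count": 0}
    actions = [decide(r) for r in valid]
    # Only this compact summary would be sent to the cloud.
    summary = {
        "count": len(valid),
        "min_m": min(valid),
        "mean_m": round(mean(valid), 3),
        "stops": actions.count("stop"),
    }
    return actions, summary


window = [2.0, 1.2, None, 0.4, 0.3, 1.0]  # simulated LiDAR ranges in metres
actions, summary = process_window(window)
print(actions)
print(summary)
```

Each reading produces an immediate local action, while the cloud only receives one small summary per window instead of every raw sample.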

Architecture Diagram

Below is a textual description of an edge computing architecture diagram for RobotOps:

```text
+-----------------------+      +------------------------+
|     Robot Sensors     |      |     Robot Sensors      |
| (Cameras, LiDAR, etc) |      | (Telemetry, Actuators) |
+----------+------------+      +-----------+------------+
           |                               |
           v                               v
   +----------------+             +----------------+
   |  Edge Device   | <---------> |  Edge Gateway  |
   | (AI Inference, |  Local Net  | (Aggregation,  |
   | Control Logic) |             | Preprocessing) |
   +-------+--------+             +--------+-------+
           |                               |
           v                               v
   +-----------------------------------------------+
   |                Cloud Platform                 |
   |        (Analytics, Training, Storage)         |
   +-----------------------------------------------+
                           ^
                           |
                  +----------------+
                  | CI/CD Pipeline |
                  | & Monitoring   |
                  +----------------+
```

Explanation: The diagram shows a three-tier architecture. Sensors on the robots feed edge devices, which run AI inference and control logic, and edge gateways, which aggregate and preprocess data over the local network. The cloud platform handles analytics, model training, and storage, and a CI/CD and monitoring pipeline feeds into it. Communication between layers uses lightweight protocols such as MQTT.

Integration Points with CI/CD or Cloud Tools

- CI/CD Integration: Edge computing in RobotOps integrates with CI/CD pipelines via tools like Jenkins or GitLab CI to automate software updates for edge devices. For example, Docker containers can be used to deploy ROS nodes to edge gateways.

- Cloud Tools: Platforms like AWS IoT Greengrass or Azure IoT Edge enable seamless integration between edge and cloud, supporting tasks like model deployment and remote monitoring.

- Example: AWS IoT Greengrass can deploy Lambda functions to edge nodes, allowing robots to run machine learning models locally while syncing with the cloud for updates.

Installation & Getting Started

Basic Setup or Prerequisites

To set up edge computing for RobotOps, you need:

- Hardware: Edge-capable devices like NVIDIA Jetson Nano, Raspberry Pi 4, or Intel NUC.

- Software: ROS (Robot Operating System), Docker, and an edge computing framework (e.g., AWS IoT Greengrass, Eclipse Kura).

- Network: A low-latency network (Wi-Fi, 5G) for device communication.

- Operating System: Ubuntu 20.04, the release that ROS Noetic (used in this guide) targets. Later Ubuntu releases are generally paired with ROS 2 distributions instead.

- Dependencies: Python 3, MQTT libraries (e.g., Paho-MQTT), and container orchestration tools like Kubernetes (optional).

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up a basic edge computing environment using ROS and AWS IoT Greengrass on a Raspberry Pi.

1. Install Ubuntu on Raspberry Pi:

   - Download Ubuntu 20.04 Server for Raspberry Pi from the official website.
   - Flash the image to an SD card using a tool like Balena Etcher.
   - Boot the Raspberry Pi and configure SSH access.

2. Install ROS Noetic:

   ```shell
   # Add the ROS package repository for Ubuntu 20.04 (focal)
   sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu focal main" > /etc/apt/sources.list.d/ros-latest.list'
   # Trust the ROS package signing key
   sudo apt-key adv --keyserver 'hkp://keyserver.ubuntu.com:80' --recv-key C1CF6E31E6BADE8868B172B4F42ED6FBAB17C654
   sudo apt update
   sudo apt install ros-noetic-ros-base
   # Load the ROS environment in every new shell
   echo "source /opt/ros/noetic/setup.bash" >> ~/.bashrc
   source ~/.bashrc
   ```

3. Install AWS IoT Greengrass:

   - Sign up for an AWS account and configure the AWS CLI.
   - Install Greengrass Core:

   ```shell
   # Greengrass Core runs on the Java runtime
   sudo apt install openjdk-11-jdk
   wget https://d2s8p88vqu9w66.cloudfront.net/releases/greengrass-nucleus-latest.zip
   unzip greengrass-nucleus-latest.zip -d GreengrassCore
   ```

   - Configure Greengrass with your AWS credentials and IoT Thing certificate (follow AWS documentation for certificate setup).

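4. Set Up MQTT Communication:

   - Install the Paho-MQTT library. The node below uses the 1.x-style `mqtt.Client()` constructor, and paho-mqtt 2.0 added a `callback_api_version` argument, so the 1.x series is pinned here:

   ```shell
   pip3 install "paho-mqtt<2"
   ```

   - Create a simple ROS node to publish sensor data to an MQTT topic. The node assumes an MQTT broker (for example, Mosquitto) is already listening on localhost:1883:

   ```python
   #!/usr/bin/env python3
   import rospy
   import paho.mqtt.client as mqtt
   from std_msgs.msg import String

   # One long-lived MQTT client; reconnecting on every message adds latency.
   client = mqtt.Client()


   def sensor_callback(msg):
       # Forward each ROS message payload to the MQTT topic.
       client.publish("robot/sensor", msg.data)


   if __name__ == "__main__":
       rospy.init_node("sensor_publisher", anonymous=True)
       client.connect("localhost", 1883, 60)  # assumes a local MQTT broker
       client.loop_start()  # background thread handles network traffic
       rospy.Subscriber("/sensor_data", String, sensor_callback)
       rospy.spin()
       client.loop_stop()
       client.disconnect()
   ```

5. Deploy a Sample Application:

   - Use AWS IoT Greengrass to deploy a Lambda function that processes sensor data locally.
   - Test the setup by simulating sensor data using a ROS publisher.

6. Verify Setup:

   - Ensure the Raspberry Pi processes data locally and sends aggregated results to the cloud.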
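
For the Lambda function mentioned in the Deploy a Sample Application step, a minimal handler might look like the sketch below. It uses only the standard Python Lambda handler signature. The event shape, the temperature threshold, and the result fields are assumptions, and how messages are routed to the function depends on the Greengrass configuration, which is not shown here.

```python
import json

TEMP_LIMIT_C = 75.0  # hypothetical alert threshold


def lambda_handler(event, context):
    """Process one sensor message locally and return a compact result.

    `event` is assumed to be a dict like {"robot_id": "r1", "temps_c": [...]}.
    """
    temps = event.get("temps_c", [])
    if not temps:
        return {"status": "no_data"}
    peak = max(temps)
    return {
        "status": "alert" if peak > TEMP_LIMIT_C else "ok",
        "robot_id": event.get("robot_id"),
        "peak_c": peak,
        "samples": len(temps),
    }


# Local dry run, no AWS services involved.
sample = {"robot_id": "r1", "temps_c": [61.5, 70.2, 78.9]}
print(json.dumps(lambda_handler(sample, None)))
```

Running the handler locally with a sample event, as in the last lines, is a quick way to check the logic before deploying it to a device.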

Real-World Use Cases

Edge computing is transformative in RobotOps. Below are four real-world scenarios:

- Warehouse Automation:
  - Scenario: Automated Guided Vehicles (AGVs) in a warehouse use edge computing to process LIDAR and camera data for navigation and obstacle avoidance.
  - Implementation: Edge nodes running ROS and TensorFlow Lite perform real-time path planning, reducing latency compared to cloud-based processing.
  - Industry: Logistics (e.g., Amazon warehouses).

- Healthcare Robotics:
  - Scenario: Surgical robots process patient data locally to assist surgeons in real time, ensuring compliance with HIPAA privacy standards.
  - Implementation: Edge devices use AI models for image recognition and motion control, with only anonymized data sent to the cloud.
  - Industry: Healthcare (e.g., Intuitive Surgical’s da Vinci system).

- Smart City Robotics:
  - Scenario: Drones monitor traffic in smart cities, analyzing video feeds at the edge to optimize signal timings and reduce congestion.
  - Implementation: Edge nodes with 5G connectivity process video streams using computer vision algorithms.
  - Industry: Urban Infrastructure (e.g., Singapore’s Smart Nation initiative).

- Industrial Maintenance:
  - Scenario: Robots in manufacturing plants perform predictive maintenance by analyzing sensor data from machinery.
  - Implementation: Edge gateways use machine learning to detect anomalies, triggering maintenance alerts without cloud dependency.
  - Industry: Manufacturing (e.g., Siemens factories).
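
As a rough illustration of the anomaly detection in the Industrial Maintenance scenario, the sketch below flags readings that sit far from a rolling baseline. It uses a simple z-score as a stand-in for the machine learning models mentioned above. The window size, the threshold, and the vibration values are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window of values."""

    def __init__(self, window=20, threshold=3.0):
        self.values = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` is anomalous relative to the current window."""
        anomalous = False
        if len(self.values) >= 5:  # wait for a little history first
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        if not anomalous:
            self.values.append(value)  # keep outliers out of the baseline
        return anomalous


detector = RollingAnomalyDetector()
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 4.0, 1.02]  # simulated mm/s
flags = [detector.update(v) for v in vibration]
print(flags)
```

Only the spike to 4.0 is flagged. Because the check needs just a short window of recent values, it can run on a gateway without any cloud connection.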

Benefits & Limitations

Key Advantages

| Benefit | Description |
|---|---|
| Reduced Latency | Processes data locally, enabling real-time decisions critical for robotics (e.g., autonomous navigation). |
| Bandwidth Optimization | Filters data at the edge, reducing network congestion and costs. |
| Enhanced Security | Keeps sensitive data local, minimizing exposure during transmission. |
| Scalability | Supports large fleets of robots without overloading central servers. |
| Autonomy | Enables robots to operate independently in disconnected environments. |

Common Challenges or Limitations

| Limitation | Description |
|---|---|
| Resource Constraints | Edge devices have limited compute and storage compared to cloud servers. |
| Complexity | Managing distributed edge nodes increases operational complexity. |
| Security Risks | Decentralized architecture requires robust device-level security (e.g., encryption, certificate-based device authentication). |
| Interoperability | Integrating diverse edge devices and protocols can be challenging. |

Best Practices & Recommendations

Security Tips

- Data Encryption: Use AES for data at rest and TLS for data in transit.

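- Authentication: Give each edge device its own credentials (for example, X.509 certificates with mutual TLS), and apply multi-factor authentication (MFA) and role-based access control (RBAC) to the operators who manage those devices.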

- Zero Trust Model: Assume all devices are potential threats and verify each access request.
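
For the TLS recommendation, the sketch below builds a client-side TLS context with Python's standard `ssl` module. The `build_tls_context` helper and its `ca_file` parameter are hypothetical names. A context like this could then be handed to an MQTT client (paho-mqtt offers `tls_set_context` for that purpose), though that wiring is not shown.

```python
import ssl


def build_tls_context(ca_file=None):
    """Create a client-side TLS context that verifies the server's certificate.

    `ca_file` is a hypothetical path to a private CA bundle; None uses the
    system trust store.
    """
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocols
    return context


context = build_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

The default context already requires a valid certificate and a matching hostname, so the main risk is code that later switches those checks off for convenience.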

Performance

- Optimize AI Models: Use lightweight inference runtimes such as TensorFlow Lite or ONNX Runtime, together with compact (for example, quantized) models, for edge inference.

- Network Efficiency: Use MQTT or CoAP for low-bandwidth, reliable communication.

- Containerization: Deploy applications using Docker to ensure portability and scalability.

Maintenance

- OTA Updates: Use CI/CD pipelines to automate software updates on edge devices.

- Monitoring: Implement tools like Prometheus for real-time monitoring of edge node health.
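
As one possible starting point for the monitoring tip, the sketch below renders node health values in the Prometheus text exposition format. The metric names, the label, and the readings are assumptions, and serving the text over HTTP for Prometheus to scrape is left out.

```python
def render_metrics(node, metrics):
    """Render gauge metrics in the Prometheus text exposition format."""
    lines = []
    for name, (help_text, value) in sorted(metrics.items()):
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} gauge")
        lines.append(f'{name}{{node="{node}"}} {value}')
    return "\n".join(lines) + "\n"


# Hypothetical health readings for one edge node.
health = {
    "edge_cpu_temp_celsius": ("CPU temperature of the edge node.", 58.5),
    "edge_battery_ratio": ("Remaining battery charge, from 0 to 1.", 0.82),
}
print(render_metrics("gateway-01", health), end="")
```

In practice a Prometheus client library would usually handle this formatting, but writing it out shows how little an edge node has to expose.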

Compliance Alignment

- Ensure compliance with standards like GDPR (for data privacy) and HIPAA (for healthcare robotics) by processing sensitive data locally.

Automation Ideas

- Automate deployment with Kubernetes or AWS IoT Greengrass for seamless scaling.

- Use Ansible or Terraform to manage edge node configurations.

Comparison with Alternatives

| Feature | Edge Computing | Cloud Computing | Fog Computing |
|---|---|---|---|
| Latency | Low (real-time) | High | Medium |
| Bandwidth Usage | Low (local processing) | High (data transfer to cloud) | Medium |
| Scalability | High (distributed nodes) | High (centralized servers) | High (hybrid) |
| Security | Enhanced (local data) | Moderate (data in transit) | Moderate |
| Use Case | Real-time robotics, IoT | Big data analytics, storage | Hybrid IoT applications |

When to Choose Edge Computing

- Choose Edge Computing: For time-critical RobotOps tasks like autonomous navigation, real-time analytics, or privacy-sensitive applications.

- Choose Alternatives: Use cloud computing for non-real-time tasks like AI model training or long-term data storage. Fog computing is suitable for hybrid scenarios requiring both edge and cloud capabilities.
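
The guidance above can be expressed as a small decision helper. This is only a sketch. The millisecond thresholds and the `needs_fleet_view` and `needs_training` flags are illustrative assumptions, not measured values or an established rule.

```python
def choose_tier(latency_budget_ms, needs_fleet_view=False, needs_training=False):
    """Suggest a processing tier from a task's latency budget and data scope.

    The millisecond thresholds are illustrative assumptions, not measured values.
    """
    if needs_training:
        return "cloud"  # model training is not time-critical
    if latency_budget_ms <= 50 and not needs_fleet_view:
        return "edge"  # on-robot or on-premises processing
    if latency_budget_ms <= 500:
        return "fog"  # local gateway aggregating several robots
    return "cloud"


tasks = {
    "obstacle avoidance": choose_tier(20),
    "fleet traffic coordination": choose_tier(200, needs_fleet_view=True),
    "nightly model retraining": choose_tier(60_000, needs_training=True),
    "long-term log archive": choose_tier(3_600_000),
}
for task, tier in tasks.items():
    print(f"{task}: {tier}")
```

Real deployments weigh more factors, such as connectivity, cost, and data sensitivity, but starting from the latency budget tends to settle most placement decisions.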

Conclusion

Edge computing is a game-changer for RobotOps, enabling real-time decision-making, autonomy, and efficiency in robotic systems. By processing data locally, it addresses the latency, bandwidth, and security challenges of traditional cloud computing. This tutorial covered its architecture, setup, use cases, and best practices, providing a solid foundation for implementing edge computing in RobotOps.

Future Trends

- 5G Integration: Enhanced connectivity will boost edge computing adoption in robotics.

- AI at the Edge: Advances in edge AI hardware and runtimes (e.g., NVIDIA Jetson, TensorFlow Lite) will enable more sophisticated robotic applications.

- Standardization: Efforts by LF Edge and ETSI will drive interoperable edge frameworks.

Next Steps

- Explore hands-on projects with ROS and edge platforms like AWS IoT Greengrass.

- Join communities like LF Edge (https://www.lfedge.org/) or ROS Discourse (https://discourse.ros.org/).

- Refer to official documentation:

- AWS IoT Greengrass: https://docs.aws.amazon.com/greengrass/

- ROS: http://wiki.ros.org/

- Eclipse Kura: https://www.eclipse.org/kura/