Introduction & Overview

Camera modules are pivotal in modern robotics, enabling machines to perceive, interpret, and interact with their environments through visual data. In the context of the Robot Operating System (ROS) and the operational practices built around it, referred to in this tutorial as “RobotOps”, camera modules serve as the “eyes” of robots, facilitating tasks like navigation, object detection, and human-robot interaction. This tutorial explores camera modules within ROS, covering their history, architecture, integration, use cases, and best practices for technical practitioners.

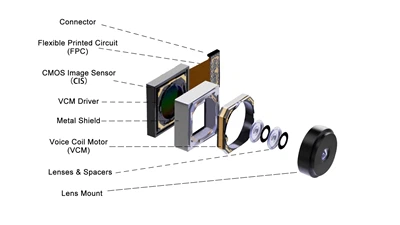

What is a Camera Module?

A camera module is a compact hardware component that integrates an image sensor (e.g., CMOS or CCD), lens, and supporting electronics to capture visual data in the form of images or videos. In ROS, camera modules are interfaced via software nodes to process visual inputs for robotic applications, such as autonomous navigation, object recognition, and quality inspection.

History or Background

The evolution of camera modules in robotics is tied to advancements in imaging technology and robotics frameworks:

- 1930s–1980s: Early camera modules, like those from Robot (a German imaging company founded in 1934), used clockwork mechanisms and 35mm film for basic imaging. These were not integrated with robotics but laid the groundwork for compact imaging systems.

- 1990s: The advent of CCD and CMOS sensors revolutionized camera modules, enabling digital imaging. Early robotic vision systems used bulky cameras with limited processing capabilities.

- 2000s: The introduction of ROS in 2007 by Willow Garage standardized robot software development, integrating camera modules with middleware for real-time data processing. Affordable USB and MIPI cameras, like the Raspberry Pi Camera Module (released in 2013), later democratized robotic vision.

- 2010s–Present: Advances in AI and machine learning, particularly convolutional neural networks (CNNs), enhanced camera modules’ capabilities for object recognition and 3D perception. Companies like e-con Systems and Leopard Imaging developed high-performance camera modules tailored for robotics, supporting interfaces like USB3 and GMSL2.

Why is it Relevant in RobotOps?

In ROS-based RobotOps, camera modules are critical for:

- Perception: Enabling robots to understand their environment through visual data.

- Autonomy: Supporting navigation, obstacle avoidance, and localization via techniques like SLAM (Simultaneous Localization and Mapping).

- Interaction: Facilitating human-robot collaboration through gesture and facial recognition.

- Automation: Streamlining industrial processes like quality inspection and material handling.

Camera modules integrate seamlessly with ROS’s modular architecture, allowing developers to build scalable, vision-driven robotic systems.

Core Concepts & Terminology

Key Terms and Definitions

| Term | Definition |
|---|---|
| Camera Module | A hardware unit with an image sensor, lens, and electronics for capturing images/videos. |
| ROS Node | A process in ROS that performs computation, e.g., a camera driver publishing image data. |
| Image Sensor | A device (e.g., CMOS, CCD) that converts light into digital signals. |
| SLAM | Simultaneous Localization and Mapping, a technique for building maps and localizing robots. |
| Stereo Vision | Using two cameras to estimate depth via triangulation, common in 3D perception. |
| Topic | A named bus in ROS for publishing/subscribing to messages, e.g., `/camera/image_raw`. |
| Sensor Fusion | Combining camera data with other sensors (e.g., LiDAR) for enhanced perception. |
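The stereo vision entry mentions depth via triangulation. For a rectified stereo pair this is usually written as Z = f · B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The sketch below illustrates that relation only; the function name and the numbers are assumptions chosen for illustration, not part of any ROS package.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    Hypothetical helper for illustration; it assumes the images are already
    rectified and the disparity comes from a separate matching step.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Illustrative values: 800 px focal length, 12.5 cm baseline, 40 px disparity.
    print(depth_from_disparity(800.0, 0.125, 40.0))
```

Because depth is inversely proportional to disparity, small disparity errors on distant objects translate into large depth errors, which is one reason stereo calibration quality matters.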

How It Fits into the RobotOps Lifecycle

The lifecycle of a ROS-based robotic system includes development, integration, deployment, and operation. Camera modules contribute as follows:

- Development: Camera drivers are developed as ROS nodes to capture and publish image data.

- Integration: Camera data is processed using ROS packages (e.g., `image_pipeline`) for tasks like image rectification or object detection.

- Deployment: Cameras are calibrated and integrated with robotic hardware, ensuring compatibility with ROS middleware.

- Operation: Real-time image processing supports tasks like navigation or quality control, with monitoring via ROS tools like `rqt`.

Architecture & How It Works

Components

A camera module in ROS consists of:

- Hardware: Image sensor (CMOS/CCD), lens, and interface (USB, MIPI, GMSL2).

- Driver: Software to interface the camera with ROS, publishing images to topics like `/camera/image_raw`.

- ROS Nodes: Nodes for image processing, e.g., `image_proc` for rectification or `vision_opencv` for feature detection.

- Processing Unit: A computer (e.g., Raspberry Pi, NVIDIA Jetson) for running ROS nodes and AI algorithms.

Internal Workflow

- Image Capture: The camera module captures raw images or videos.

- Data Transmission: Images are sent via interfaces like USB3 or GMSL2 to the processing unit.

- ROS Node Publication: A camera driver node publishes images to a ROS topic.

- Processing: Other nodes subscribe to the topic, performing tasks like object detection or depth estimation.

- Action: Processed data informs robot actions, e.g., adjusting navigation paths.
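The capture, publish, process, act flow above can be sketched without a ROS installation. The example below is a plain-Python stand-in: `TopicBus`, `run_pipeline`, the `/detections` topic, and the brightness threshold are all hypothetical names and values invented for illustration, and delivery is synchronous, unlike real ROS transport.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List, Sequence


class TopicBus:
    """Hypothetical in-process stand-in for ROS topics (not a ROS API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver the message synchronously to every subscriber of the topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


def run_pipeline(frames: Sequence[Sequence[int]]) -> List[str]:
    """Push frames through a driver -> processing -> action chain."""
    bus = TopicBus()
    commands: List[str] = []

    def process(frame: Sequence[int]) -> None:
        # Processing node: treat a dark frame as an obstacle (toy rule).
        brightness = sum(frame) / len(frame)
        bus.publish("/detections", {"obstacle": brightness < 50})

    def act(detection: dict) -> None:
        # Action node: turn the detection into a motion command.
        commands.append("stop" if detection["obstacle"] else "forward")

    bus.subscribe("/camera/image_raw", process)
    bus.subscribe("/detections", act)

    # Driver node: publish each captured frame to the image topic.
    for frame in frames:
        bus.publish("/camera/image_raw", frame)
    return commands


if __name__ == "__main__":
    print(run_pipeline([[10, 20, 30], [200, 220, 240]]))
```

The point of the sketch is the decoupling: the driver only knows the topic name, so processing and action nodes can be swapped without touching the capture code.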

Architecture Diagram Description

The architecture diagram illustrates the flow of data in a ROS-based camera module system:

- Camera Module: Connected via USB/MIPI to a processing unit (e.g., NVIDIA Jetson).

- ROS Driver Node: Publishes raw images to `/camera/image_raw`.

- Processing Nodes: Subscribe to `/camera/image_raw`, performing tasks like rectification (`image_proc`), object detection (`vision_opencv`), or SLAM (`rtabmap_ros`).

- Action Nodes: Use processed data to control actuators (e.g., `/cmd_vel` for navigation).

- Visualization: Tools like `rqt_image_view` display images for debugging.

- Connections: Arrows indicate data flow via ROS topics, with a master node coordinating communication.

    [ Camera Module ] → [ Image Preprocessing Unit ] → [ AI/ML Model ]
            |                        |                       |
            ↓                        ↓                       ↓
    [ Local Processing ]     [ ROS Middleware ] → [ Robot Control System ]
            |                        |                       |
            ↓                        ↓                       ↓
    [ CI/CD Pipeline ]      [ Cloud Vision AI ]   [ Monitoring Dashboard ]

Integration Points with CI/CD or Cloud Tools

- CI/CD: Camera module integration can be automated using ROS build tools like `catkin` or `colcon`. Docker containers package camera drivers and dependencies for consistent deployment.

- Cloud Tools: ROS2 supports cloud integration via DDS (Data Distribution Service). Camera data can be streamed to cloud platforms like AWS RoboMaker for remote processing or monitoring.

Installation & Getting Started

Basic Setup or Prerequisites

- Hardware: Raspberry Pi 4 or NVIDIA Jetson, camera module (e.g., Raspberry Pi Camera V2 or USB webcam), and a robot platform.

- Software: Ubuntu 20.04 with ROS Noetic (ROS1) or Ubuntu 22.04 with ROS2 Humble (ROS Melodic targets Ubuntu 18.04), plus camera drivers (e.g., `uvc_camera`, `rpi_camera`).

- Dependencies: Install `ros-<distro>-image-pipeline`, `ros-<distro>-vision-opencv`.
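The `ros-<distro>-...` names above follow the usual convention for ROS Debian packages: the distro name is inserted and underscores in the ROS package name become hyphens. The helper below is a small sketch of that mapping; the function names are hypothetical and the script only builds a command string, it does not run `apt`.

```python
from typing import Iterable


def apt_package_name(distro: str, ros_package: str) -> str:
    """Map a ROS package name to its conventional apt package name."""
    return f"ros-{distro}-{ros_package.replace('_', '-')}"


def install_command(distro: str, ros_packages: Iterable[str]) -> str:
    """Build (but do not execute) an apt install command line."""
    names = " ".join(apt_package_name(distro, pkg) for pkg in ros_packages)
    return f"sudo apt install {names}"


if __name__ == "__main__":
    print(install_command("noetic", ["image_pipeline", "vision_opencv"]))
```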

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

1. Install ROS (this assumes the ROS apt repository has already been added to the system):

       sudo apt update
       sudo apt install ros-noetic-desktop-full
       source /opt/ros/noetic/setup.bash

2. Set Up Workspace:

       mkdir -p ~/catkin_ws/src
       cd ~/catkin_ws
       catkin_make
       source devel/setup.bash

3. Install Camera Drivers (e.g., for a USB webcam):

       sudo apt install ros-noetic-usb-cam

4. Launch Camera Node:

       roscore &
       rosrun usb_cam usb_cam_node

5. Visualize Images:

       rosrun rqt_image_view rqt_image_view

6. Test with a Subscriber Node:

       #!/usr/bin/env python3
       # ROS Noetic uses Python 3, so the shebang must point at python3.
       import rospy
       from sensor_msgs.msg import Image

       def callback(data):
           # Called once for every image message received on the topic
           rospy.loginfo("Received image")

       rospy.init_node('image_subscriber', anonymous=True)
       rospy.Subscriber('/usb_cam/image_raw', Image, callback)
       rospy.spin()  # Keep the node alive until it is shut down

   Save as `image_subscriber.py` in a package's scripts directory, make it executable, and run (replace `your_package` with the name of your own package):

       chmod +x image_subscriber.py
       rosrun your_package image_subscriber.py

Real-World Use Cases

- Warehouse Automation:

- Quality Inspection:

- Human-Robot Interaction:

- Agricultural Robotics:

Benefits & Limitations

Key Advantages

- Enhanced Perception: Enables robots to interpret complex environments.

- Modularity: Integrates seamlessly with ROS’s node-based architecture.

- Cost-Effectiveness: Affordable options like Raspberry Pi Camera V2 lower entry barriers.

- Flexibility: Supports diverse applications, from navigation to inspection.

Common Challenges or Limitations

- Processing Overhead: High-resolution images require significant computational resources.

- Calibration Complexity: Stereo cameras need precise calibration for accurate depth estimation.

- Lighting Sensitivity: Performance degrades in poor lighting conditions.

- Cost of Advanced Modules: 3D cameras (e.g., depth cameras) are expensive compared to 2D cameras.
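To make the processing overhead point concrete, the raw data rate of an uncompressed stream is simply width × height × bytes per pixel × frames per second. The sketch below computes it in megabits per second; the function name is hypothetical and the figures ignore message headers, compression, and transport overhead.

```python
def raw_stream_bandwidth_mbps(width: int, height: int,
                              bytes_per_pixel: int, fps: int) -> float:
    """Uncompressed video data rate in megabits per second (10**6 bits)."""
    bytes_per_second = width * height * bytes_per_pixel * fps
    return bytes_per_second * 8 / 1_000_000


if __name__ == "__main__":
    # 8-bit RGB (3 bytes per pixel) at 30 frames per second.
    for name, (w, h) in {"640x480": (640, 480), "1920x1080": (1920, 1080)}.items():
        print(name, raw_stream_bandwidth_mbps(w, h, 3, 30), "Mbit/s")
```

Under these assumptions a 1920×1080 RGB stream at 30 fps carries roughly 1.5 Gbit/s of raw data, about 6.75 times the rate of a 640×480 stream, which is why resolution and frame rate are the first settings to revisit on constrained hardware.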

Best Practices & Recommendations

Security Tips

- Secure Communication: Use ROS2 with DDS security plugins to encrypt camera data streams.

- Firmware Updates: Regularly update camera firmware to patch vulnerabilities.

Performance

- Optimize Resolution: Use lower resolutions for real-time tasks to reduce latency.

- Edge Processing: Leverage edge devices like NVIDIA Jetson for faster image processing.
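As a rough illustration of trading resolution for latency, the sketch below reduces an image by keeping every n-th pixel in each direction. It is a deliberately naive, pure-Python example on nested lists; the `downsample` name is hypothetical, no smoothing is applied (so aliasing is possible), and in practice the lower resolution would more likely be requested from the camera driver or produced by an image library.

```python
from typing import List, Sequence


def downsample(image: Sequence[Sequence[int]], factor: int) -> List[List[int]]:
    """Keep every `factor`-th row and column of a row-major grayscale image."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return [list(row[::factor]) for row in image[::factor]]


if __name__ == "__main__":
    frame = [
        [1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16],
    ]
    # Halving each dimension leaves a quarter of the pixels to process.
    print(downsample(frame, 2))
```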

Maintenance

- Regular Calibration: Recalibrate cameras periodically to maintain accuracy.

- Clean Lenses: Ensure lenses are free of dust and smudges for clear images.
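One possible way to decide when recalibration is due is to track the reprojection error of known reference points. The sketch below uses the ideal pinhole model, u = fx · X/Z + cx and v = fy · Y/Z + cy. It assumes the points are already expressed in the camera frame and ignores lens distortion; the function names and intrinsic values are illustrative assumptions, not output from `camera_calibration`.

```python
import math
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project_point(point_xyz: Point3D, fx: float, fy: float,
                  cx: float, cy: float) -> Pixel:
    """Project a camera-frame 3D point to pixel coordinates (pinhole model)."""
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


def mean_reprojection_error(points_xyz: Sequence[Point3D],
                            observed_px: Sequence[Pixel],
                            fx: float, fy: float,
                            cx: float, cy: float) -> float:
    """Average pixel distance between projected and observed points."""
    if not points_xyz or len(points_xyz) != len(observed_px):
        raise ValueError("need equally sized, non-empty point lists")
    total = 0.0
    for point, (u_obs, v_obs) in zip(points_xyz, observed_px):
        u, v = project_point(point, fx, fy, cx, cy)
        total += math.hypot(u - u_obs, v - v_obs)
    return total / len(points_xyz)


if __name__ == "__main__":
    # Illustrative intrinsics: fx = fy = 800 px, principal point (320, 240).
    print(project_point((0.5, -0.25, 2.0), 800.0, 800.0, 320.0, 240.0))
```

A mean error that drifts upward over time on the same reference target suggests the stored intrinsics no longer match the hardware, for example after a lens has been bumped or refocused.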

Compliance Alignment

- Data Privacy: Comply with GDPR or CCPA when capturing human images, especially in public spaces.

- Safety Standards: Adhere to ISO 10218 for safe human-robot interaction.

Automation Ideas

- Automate camera calibration using ROS packages like `camera_calibration`.

- Use CI/CD pipelines to deploy updated camera drivers across robot fleets.

Comparison with Alternatives

| Feature | Camera Module (ROS) | LiDAR | Ultrasonic Sensors |
|---|---|---|---|
| Data Type | Visual (2D/3D) | 3D Point Cloud | Distance (1D) |
| Cost | Low–High | High | Low |
| Use Case | Object detection, navigation | Precise mapping | Basic obstacle detection |
| Environment | Versatile | Indoor/Outdoor | Limited range |
| Processing Needs | High | Moderate | Low |

When to Choose Camera Module

- Choose Camera Module: For vision-based tasks like object recognition, human interaction, or low-cost solutions.

- Choose Alternatives: Use LiDAR for high-precision mapping in low-light conditions or ultrasonic sensors for simple, low-cost distance sensing.

Conclusion

Camera modules are indispensable in ROS-based RobotOps, enabling robots to perceive and interact with their environments. Their integration with ROS’s modular architecture supports a wide range of applications, from warehouse automation to healthcare. While challenges like processing overhead and calibration complexity exist, best practices in optimization and maintenance can mitigate these issues. As AI and imaging technologies advance, camera modules will continue to evolve, supporting more sophisticated robotic applications.

Future Trends

- AI Integration: Enhanced object detection with generative AI and LLMs.

- Edge Computing: Increased use of edge devices for real-time processing.

- Multispectral Imaging: Growing adoption in agriculture and environmental monitoring.

Next Steps

- Explore ROS camera packages at ros.org.

- Join the ROS community on discourse.ros.org for support and updates.

- Experiment with camera modules using affordable hardware like Raspberry Pi.