Introduction & Overview

LiDAR (Light Detection and Ranging) is a transformative remote sensing technology that uses laser pulses to measure distances and generate precise, high-resolution 3D models of environments. In the context of RobotOps (Robotics Operations), LiDAR is a cornerstone for enabling robots to perceive, navigate, and interact with their surroundings autonomously. This tutorial provides a detailed exploration of LiDAR’s role in RobotOps, covering its history, architecture, integration, practical setup, use cases, benefits, limitations, best practices, and comparisons with alternatives.

What is LiDAR?

LiDAR is a remote sensing method that emits laser pulses and measures the time-of-flight (ToF) or phase shift of the reflected signals to calculate distances. By scanning the environment, LiDAR creates a 3D point cloud, a digital representation of the surroundings, which is critical for robotic navigation, mapping, and object detection.

- Core Principle: LiDAR calculates distance using the formula:

\[ d = \frac{c \cdot t}{2} \]

where \(d\) is the distance, \(c\) is the speed of light, and \(t\) is the time-of-flight.

- Types: Includes airborne, terrestrial, and mobile LiDAR, each suited for specific applications.
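As a worked instance of the time-of-flight formula, the following minimal sketch converts a round-trip time into a distance. The function name `tof_to_distance` is illustrative only, and the vacuum speed of light is used as an approximation for air.

```python
# Speed of light in vacuum (m/s); close enough to air for a sketch.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_distance(round_trip_time_s: float) -> float:
    """Return the one-way distance in metres for a round-trip time in seconds."""
    if round_trip_time_s < 0:
        raise ValueError("time-of-flight cannot be negative")
    # The pulse travels to the target and back, hence the division by two.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A return received 100 ns after emission corresponds to roughly 15 m.
    print(f"{tof_to_distance(100e-9):.2f} m")
```

The scale is worth noting: one nanosecond of timing error corresponds to about 15 cm of range error, which is why the receiver electronics matter so much for accuracy.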

History or Background

LiDAR’s origins trace back to the 1960s, with early applications in meteorology for studying atmospheric particles. The technology gained prominence in the 1970s when NASA used it to map the moon’s topography during the Apollo 15 mission in 1971. The 1990s marked a significant milestone with the integration of GPS, enabling precise georeferenced 3D models. Advancements in laser technology, computing power, and sensor miniaturization in the 2000s made LiDAR compact and affordable, paving its way into robotics and autonomous systems.

- Key Milestones:

- 1961: Hughes Aircraft Company develops the first LiDAR prototype.

- 1971: NASA employs LiDAR for lunar mapping.

- 1990s: GPS integration enhances LiDAR’s geospatial accuracy.

- 2005: LiDAR proves pivotal in the DARPA Grand Challenge, helping Stanford’s Stanley become the first autonomous vehicle to complete the course.

- 2020s: Solid-state LiDAR and AI-driven data processing reduce costs and improve performance.

Why is it Relevant in RobotOps?

RobotOps focuses on the deployment, management, and operation of robotic systems, akin to DevOps for software. LiDAR is critical in RobotOps because it provides robots with high-fidelity environmental data, enabling safe navigation, precise mapping, and real-time decision-making. LiDAR drivers and perception code plug into the Robot Operating System (ROS), CI/CD pipelines, and cloud platforms for continuous monitoring and updates, which helps robots operate efficiently in dynamic environments.

- Relevance:

- Enables autonomous navigation and obstacle avoidance.

- Supports real-time environment mapping via SLAM (Simultaneous Localization and Mapping).

- Enhances scalability in robotic fleets through cloud-based data processing.

- Facilitates continuous integration and testing of robotic perception systems.

Core Concepts & Terminology

Key Terms and Definitions

| Term | Definition |
|---|---|
| LiDAR | Light Detection and Ranging, a technology using laser pulses to measure distances and create 3D models. |
| Point Cloud | A set of data points in 3D space representing the environment, generated by LiDAR scans. |
| Time-of-Flight (ToF) | The time taken for a laser pulse to travel to an object and return, used to calculate distance. |
| SLAM | Simultaneous Localization and Mapping, a family of algorithms that build a map of an environment while tracking the robot’s position within it, often from LiDAR data. |
| Field of View (FoV) | The angular extent a LiDAR sensor can capture in a single scan, typically measured in degrees. |
| Solid-State LiDAR | A compact LiDAR with no rotating assembly, using fixed or MEMS-based scanning mechanisms. |

How It Fits into the RobotOps Lifecycle

LiDAR integrates into the RobotOps lifecycle across planning, development, deployment, and monitoring phases:

- Planning: Define sensor requirements based on environment and tasks (e.g., indoor vs. outdoor).

- Development: Integrate LiDAR with ROS for SLAM and navigation algorithms.

- Deployment: Use CI/CD pipelines to deploy updated perception models to robotic fleets.

- Monitoring: Continuously analyze LiDAR data in the cloud to detect anomalies and optimize performance.
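For the monitoring phase, one simple signal a fleet could track is the share of beams in each scan that produced no usable return. The sketch below is an assumption about what such a check might look like, not a prescribed metric; the function names and the 0.5 threshold are hypothetical.

```python
import math
from typing import Sequence


def invalid_return_ratio(
    ranges: Sequence[float], range_min: float, range_max: float
) -> float:
    """Fraction of beams with no usable return (NaN, inf, or out of range)."""
    if not ranges:
        # An empty scan carries no information, so treat it as fully invalid.
        return 1.0
    invalid = sum(
        1
        for r in ranges
        if not math.isfinite(r) or r < range_min or r > range_max
    )
    return invalid / len(ranges)


def is_scan_anomalous(
    ranges: Sequence[float],
    range_min: float,
    range_max: float,
    max_invalid_ratio: float = 0.5,  # assumed threshold, tune per sensor
) -> bool:
    """Flag a scan whose invalid-return ratio exceeds the threshold."""
    return invalid_return_ratio(ranges, range_min, range_max) > max_invalid_ratio
```

A sustained rise in this ratio could point to a dirty lens, heavy fog, or a failing sensor, which makes it a reasonable candidate for an alerting rule.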

Architecture & How It Works

Components

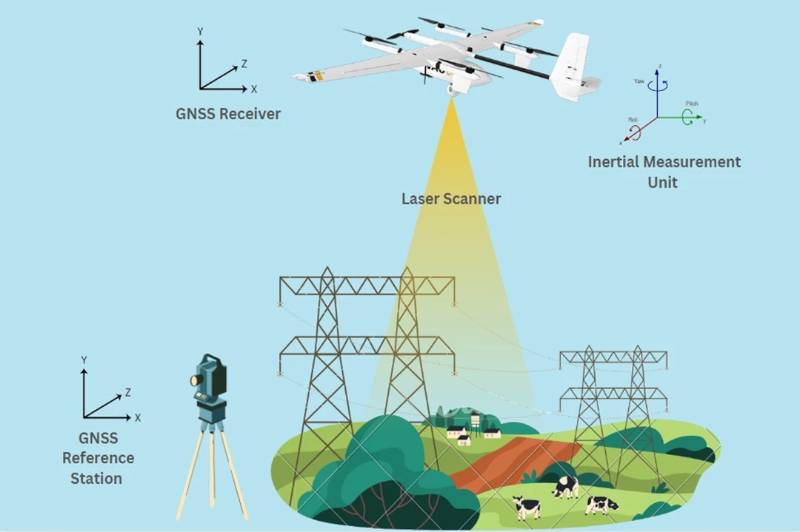

A LiDAR system comprises three primary components:

- Laser: Emits rapid, high-frequency light pulses (typically near-infrared or 532 nm for bathymetric applications).

- Scanner: Directs laser pulses across the environment, often using rotating mirrors or MEMS.

- Receiver: Detects reflected pulses using photodetectors (e.g., avalanche photodiodes or single-photon detectors).

Additional components include GPS for georeferencing, IMUs for orientation, and signal processing units for data interpretation.

Internal Workflow

- Pulse Emission: The laser emits pulses toward the environment.

- Reflection: Pulses reflect off objects and return to the receiver.

- Time Measurement: The receiver calculates ToF or phase shift.

- Data Processing: Algorithms convert raw data into a 3D point cloud, filtering noise and outliers.

- Integration: The point cloud is used by SLAM or other algorithms for navigation and mapping.
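The data-processing step can be sketched for the 2D case as a polar-to-Cartesian conversion with basic filtering. The `Scan` class below is a hypothetical stand-in whose field names mirror the ROS `sensor_msgs/LaserScan` message; the range limits in the usage example are illustrative values, not a specification of any sensor.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Scan:
    """Hypothetical container; field names mirror sensor_msgs/LaserScan."""
    angle_min: float        # angle of the first beam (rad)
    angle_increment: float  # angular step between beams (rad)
    range_min: float        # shortest valid range (m)
    range_max: float        # longest valid range (m)
    ranges: List[float]     # one measured range per beam (m)


def scan_to_points(scan: Scan) -> List[Tuple[float, float]]:
    """Convert valid beams to (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(scan.ranges):
        # Drop NaN/inf returns and anything outside the rated range.
        if not math.isfinite(r) or not (scan.range_min <= r <= scan.range_max):
            continue
        theta = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    scan = Scan(
        angle_min=0.0,
        angle_increment=math.pi / 2,
        range_min=0.15,
        range_max=12.0,
        ranges=[1.0, float("inf"), 2.0, 0.05],
    )
    # Only the first and third beams survive the filter.
    print(scan_to_points(scan))
```

Real pipelines typically add statistical outlier removal and motion compensation on top of this; the sketch covers only the geometric conversion and range gating.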

Architecture Diagram

Below is a textual description of a LiDAR system architecture integrated into a RobotOps pipeline:

               ┌──────────────────────┐
               │     LiDAR Sensor     │
               └──────────┬───────────┘
                          │ Laser pulses
                          ▼
               ┌──────────────────────┐
               │  Onboard Processor   │
               │  (Point Cloud Gen.)  │
               └──────────┬───────────┘
                          │
                          ▼
               ┌──────────────────────┐
               │  SLAM & Perception   │
               │ (Mapping, Obstacles) │
               └──────────┬───────────┘
                          │
               ┌──────────┴───────────┐
               ▼                      ▼
    ┌────────────────────┐  ┌───────────────────┐
    │   RobotOps Layer   │  │ Monitoring Tools  │
    │ (ROS, APIs, CI/CD) │  │ (Prometheus etc.) │
    └──────────┬─────────┘  └─────────┬─────────┘
               │ Cloud Sync           │ Alerts/Logs
               ▼                      ▼
    ┌───────────────────────────────────────────┐
    │      Cloud Analytics & DevOps CI/CD       │
    │      (AWS, GCP, Azure, Jenkins etc.)      │
    └───────────────────────────────────────────┘

- LiDAR Sensor: Captures raw environmental data.

- ROS Node: Processes LiDAR data using ROS message types (e.g., `sensor_msgs/LaserScan`).

- SLAM Algorithm: Generates maps and localizes the robot.

- CI/CD Pipeline: Deploys updated models and firmware.

- Cloud Platform: Stores and analyzes point clouds for fleet management.

- Edge Device: Executes real-time navigation and control.

Integration Points with CI/CD or Cloud Tools

- CI/CD: Use Jenkins or GitLab CI to automate testing and deployment of LiDAR-based perception models. For example, unit tests validate SLAM accuracy, while integration tests simulate real-world environments.

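- Cloud Tools: Services such as AWS RoboMaker or Google Cloud IoT Core process LiDAR data for near-real-time analytics, enabling fleet monitoring and predictive maintenance. Google retired IoT Core in August 2023, so confirm that a service is still offered before building on it.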

- ROS Integration: LiDAR data is published to ROS topics (`/scan` for 2D, `/pointcloud` for 3D), facilitating integration with navigation stacks.
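To make the idea of a CI accuracy gate concrete, here is a minimal sketch of a test that fails the pipeline when an estimated trajectory drifts too far from a reference. The function name, the sample poses, and the 0.05 m budget are all assumptions for illustration. The metric is a plain position RMSE without trajectory alignment, so it is simpler than the absolute trajectory error used in SLAM benchmarks.

```python
import math
from typing import Sequence, Tuple

Pose2D = Tuple[float, float]  # (x, y) in metres


def trajectory_rmse(
    estimated: Sequence[Pose2D], reference: Sequence[Pose2D]
) -> float:
    """Root-mean-square position error between equally sampled trajectories."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and the same length")
    squared = [
        (ex - rx) ** 2 + (ey - ry) ** 2
        for (ex, ey), (rx, ry) in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


def test_slam_accuracy_within_budget() -> None:
    # Hypothetical data; a real pipeline would load poses from a simulation
    # run or a replayed sensor log.
    reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    estimated = [(0.0, 0.0), (1.0, 0.03), (2.0, 0.04)]
    max_rmse_m = 0.05  # assumed accuracy budget
    assert trajectory_rmse(estimated, reference) <= max_rmse_m
```

A test runner such as pytest would collect `test_slam_accuracy_within_budget` automatically, so a Jenkins or GitLab CI job only needs to run the test suite and fail the build on a non-zero exit code.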

Installation & Getting Started

Basic Setup or Prerequisites

- Hardware:

- LiDAR sensor (e.g., RPLIDAR A1, Velodyne Puck, or Ouster OS1).

- Computing platform (e.g., Raspberry Pi, NVIDIA Jetson Nano).

- Power supply (5V for USB-powered LiDAR, 12/24V for others).

- Software:

- Ubuntu 20.04 (for ROS Noetic) or Ubuntu 22.04 (for ROS 2 Humble).

- ROS Noetic or ROS 2 Humble, matching the Ubuntu release above.

- LiDAR driver package (specific to the sensor model).

- Tools: Git, Python 3, RViz for visualization.

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up an RPLIDAR A1 with ROS Noetic on Ubuntu 20.04.

1. Install Ubuntu and ROS:

- Download and install Ubuntu 20.04.

- Install ROS Noetic:

sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu focal main" > /etc/apt/sources.list.d/ros-latest.list'

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key C1CF6E31E6BADE8868B172B4F42ED6FBAB17C654

sudo apt update

sudo apt install ros-noetic-desktop-full

echo "source /opt/ros/noetic/setup.bash" >> ~/.bashrc

source ~/.bashrc

2. Connect the LiDAR:

- Plug the RPLIDAR A1 into a USB port.

- Check device connection:

ls /dev/ttyUSB*

- Set permissions:

sudo chmod 666 /dev/ttyUSB0

3. Install RPLIDAR ROS Package:

- Create a ROS workspace:

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src

git clone https://github.com/Slamtec/rplidar_ros.git

- Build the workspace:

cd ~/catkin_ws

catkin_make

source devel/setup.bash

4. Launch the LiDAR Node:

- Start ROS core:

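roscore

- In a new terminal, source the workspace again and launch the RPLIDAR node (if this launch file is missing in your checkout, look in the package’s launch/ directory for a model-specific one):

source ~/catkin_ws/devel/setup.bash

roslaunch rplidar_ros rplidar.launch

5. Visualize Data in RViz: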

- Open RViz:

rosrun rviz rviz

- Add a LaserScan display, set its topic to /scan, and set the fixed frame to laser.

6. Test the Setup:

- Verify data streaming:

rostopic echo /scan

- Observe the 2D point cloud in RViz.

Real-World Use Cases

- Autonomous Warehouse Robots:

- Scenario: AGVs (Automated Guided Vehicles) in Amazon warehouses use LiDAR for navigation and obstacle avoidance.

- Implementation: LiDAR generates 3D point clouds for SLAM, enabling robots to map warehouse layouts and avoid dynamic obstacles like workers or pallets.

- Industry: Logistics.

- Precision Agriculture:

- Scenario: John Deere’s autonomous tractors use LiDAR to monitor crop health and optimize planting.

- Implementation: Airborne LiDAR maps terrain and vegetation, while ground-based LiDAR tracks growth patterns, improving yield predictions.

- Industry: Agriculture.

- Construction Site Inspection:

- Scenario: Boston Dynamics’ Spot robot uses LiDAR to inspect construction sites for structural integrity.

- Implementation: LiDAR scans generate 3D models of buildings, identifying defects or misalignments.

- Industry: Construction.

- Self-Driving Vehicles:

- Scenario: Waymo’s autonomous cars rely on LiDAR for real-time navigation and pedestrian detection.

- Implementation: High-resolution LiDAR point clouds enable precise obstacle detection, including at night and in low light; heavy rain, fog, or snow can still degrade the data.

- Industry: Automotive.

Benefits & Limitations

Key Advantages

- High Accuracy: Provides centimeter-level precision for 3D mapping.

- Lighting Independence: Operates effectively in low-light or dark environments.

- Real-Time Processing: Enables rapid decision-making for navigation and obstacle avoidance.

- Versatility: Applicable across industries like agriculture, logistics, and automotive.

Common Challenges or Limitations

| Challenge | Description |
|---|---|
| Cost | High-end LiDAR systems are expensive, though solid-state options are reducing costs. |
| Weather Sensitivity | Heavy rain, fog, or snow can degrade data quality. |
| Limited FoV | Some LiDARs have restricted fields of view, requiring multiple sensors for full coverage. |
| Data Processing | Large point clouds demand significant computational resources. |

Best Practices & Recommendations

- Security → Encrypt LiDAR data in transit to the cloud.

- Performance → Use edge computing to preprocess LiDAR data.

- Compliance → Ensure alignment with ISO 26262 for automotive robots.

- Automation → Integrate LiDAR SLAM updates into CI/CD pipelines for continuous improvement.
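One common form of edge preprocessing is voxel-grid downsampling, which shrinks a point cloud before it is uploaded or passed to heavier algorithms. The following is a dependency-free sketch under the assumption that points are plain `(x, y, z)` tuples in metres; production systems would more likely use a point cloud library, and the function name here is illustrative.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def voxel_downsample(points: Iterable[Point], voxel_size: float) -> List[Point]:
    """Keep one centroid per occupied cubic voxel of edge length voxel_size."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Maps voxel index -> [sum_x, sum_y, sum_z, count].
    buckets: Dict[VoxelKey, List[float]] = {}
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        acc = buckets.setdefault(key, [0.0, 0.0, 0.0, 0.0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1.0
    # Replace every voxel's points with their centroid.
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in buckets.values()]


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
    # The first two points share a 0.5 m voxel and collapse into one centroid.
    print(voxel_downsample(cloud, voxel_size=0.5))
```

The voxel size is the main tuning knob: larger voxels cut bandwidth and compute further but blur small obstacles, so the right value depends on the robot's task.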

Comparison with Alternatives

| Technology | Accuracy | Cost | Best For |
|---|---|---|---|
| LiDAR | High (cm-level) | Expensive | Precise navigation, mapping |
| Radar | Medium | Moderate | Works well in bad weather |
| Camera (Vision) | High (but 2D) | Cheap | Object recognition, classification |
| Ultrasonic | Low | Very Cheap | Short-range obstacle detection |

👉 When to choose LiDAR?

- You need high-accuracy maps

- Your RobotOps workflows demand real-time navigation

- Your robots operate in complex, unstructured environments

Conclusion

LiDAR is a cornerstone of RobotOps, enabling robots to perceive and navigate complex environments with centimeter-level accuracy. Its integration with ROS, CI/CD pipelines, and cloud platforms supports scalability and continuous improvement in robotic systems. While challenges like cost and weather sensitivity persist, advancements in solid-state LiDAR and AI-driven processing are expanding its accessibility and capabilities. Future trends include increased adoption of solid-state LiDAR, enhanced sensor fusion, and integration with 5G for real-time data streaming.

Next Steps

- Experiment with open-source LiDAR datasets (e.g., KITTI dataset).

- Explore ROS-based SLAM frameworks like Cartographer or LOAM.

- Join robotics communities like ROS Discourse or IEEE Robotics and Automation Society.

Resources

- Official Docs: ROS Documentation, Velodyne LiDAR

- Communities: ROS Discourse, Robotics Stack Exchange